NGD Software and Algorithms

Software and Algorithms Development

The Next Generation Development (NGD) Software and Algorithms subproject includes a portfolio of research and development efforts that will impact E3SM in the one-to-five year time frame. This computationally-oriented subproject is specifically looking to make investments that will help the core Performance and Infrastructure Groups deliver on their production code responsibilities through the next versions of the E3SM model. This allows for development efforts that are longer-term, more focused, and riskier than can be undertaken by the E3SM Core groups (but tend to be less risky than is typical for the SciDAC portfolio).

This subproject will look to extend the programmatic role of the Climate Model Development and Validation (CMDV) Software Modernization (CMDV-SM) project. The high-level strategic vision for the subproject remains defined by the three themes of CMDV-SM:

- Improve the trustworthiness of the model as E3SM moves toward decision support missions.

- Improve the agility of the codebase and development process to respond to the ongoing disruption in computer architectures.

- Expand leverage of DOE computational science expertise and investments.

The proposed efforts include work to support a verification culture, expanded testing infrastructure, and several algorithmic and performance efforts to help developers with the bottleneck they encounter with strong scaling of an explicit code on new architectures.

Verification/Testing Infrastructure

Among the portfolio of tasks in this subproject, the team will continue their efforts started under CMDV-SM along theme number 1 (listed above) in formalizing the verification process for E3SM and expanding the verification effort. The team has made progress in defining and deploying a common infrastructure for running verification tests and injecting the results into the documentation. This software solution involves the use of Jupyter notebooks, which combine the ease of documentation of presentation software with the ability to execute commands to launch code and perform post-processing. The team’s vision is for the verification notebooks to live in the codebase, version controlled, so verification tests can be rerun on-demand. Under the current project, computer scientists will work to mature the infrastructure, so it can be broadly deployed to all active E3SM development efforts. Whether the team’s verification efforts find climate-changing bugs or not, the team will be gaining confidence in its implementation of the model and solution algorithms.

To date, developers have focused on atmospheric physics libraries, which appeared to be lagging behind the dycores in their verification and testing culture. The team would like to see some of the promising work in this area come to fruition, since the first results of a new interdisciplinary project that involved devising a common vocabulary and shared vision are just emerging. The team will continue verification work on the MAM aerosol library. In addition to the direct benefits to MAM, this work will serve as a prototype for work in other physics libraries by being an early adopter of the infrastructure described above.

The team has recently been growing its infrastructure to automate the running of sections of code that were not originally developed to run stand-alone, as a means to efficiently expand test coverage. In this task, scientists look to expand that effort to develop a streamlined workflow to create new tests by extracting representative performance-critical kernels and associated inputs from full E3SM model runs. Presently, this is a tedious manual process that is error-prone. To automate this workflow, developers will evaluate and extend capabilities of the following tools to fit the needs of E3SM: (a) KGen, a Python-based kernel extraction tool, and (b) Flang, a Fortran compiler targeting LLVM. This will create a pipeline of manageable kernels and mini-apps that can be used to populate a comprehensive set of unit tests as well as serve as targets for performance optimization. The team will design the workflow to ensure that optimized kernels can be drop-in replacements for their baseline counterparts.

Climate Reproducibility

As E3SM source code is adapted for exascale computing on DOE leadership computing facilities, it often produces answers that are not bit-for-bit reproducible. It is important to establish whether these answer changes significantly impact model climate statistics. As a part of the CMDV-SM project, multivariate tests for high dimensions and low sample sizes (e.g., cross-match tests and energy tests borrowed from the machine learning and statistics communities) have been adapted for the atmosphere model component to assess climate reproducibility after the introduction of a non-bit-for-bit change. These tests show promise and have been able to detect climate-changing model behavior associated with aggressive compiler optimization choices (Mahajan et al., 2017). Researchers are extending these tests to verify other model components, primarily the ocean model, as well as individual software kernels and libraries.

The multivariate tests involve generating an ensemble of short simulations (by perturbing initial conditions at the level of machine precision) for both the control and the perturbed model, and comparing the climate statistics of the two ensembles after the initial-condition perturbations have propagated through the nonlinear system. For the atmosphere, short ensembles (less than one year) have been found to be sufficient to evaluate the noise statistics for the purposes of model verification. For model components with dominant variability on much longer time scales (ocean and ice), researchers will assess what simulation duration is sufficient to capture the noise statistics needed for climate reproducibility tests. The tests will be deployed in the E3SM testing system. For additional information, see the E3SM news feature story titled “Can We Switch Computers? An Application of E3SM Climate Reproducibility Tests”.
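The two-ensemble comparison described above can be illustrated with a small permutation-based energy test. This is a minimal sketch rather than the E3SM implementation: the ensembles are synthetic Gaussian draws standing in for per-member climate statistics (for example, global means of a few variables), and the function names, ensemble size, and number of permutations are all assumptions made for illustration.

```python
import math
import random


def mean_distance(a, b):
    """Mean Euclidean distance over all pairs (one member of a, one of b)."""
    total = sum(math.dist(x, y) for x in a for y in b)
    return total / (len(a) * len(b))


def energy_statistic(a, b):
    """Energy distance between two samples; zero when the samples coincide."""
    return 2.0 * mean_distance(a, b) - mean_distance(a, a) - mean_distance(b, b)


def permutation_p_value(control, perturbed, n_perm=199, seed=0):
    """Fraction of random relabelings whose statistic reaches the observed one."""
    rng = random.Random(seed)
    observed = energy_statistic(control, perturbed)
    pooled = list(control) + list(perturbed)
    n = len(control)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if energy_statistic(pooled[:n], pooled[n:]) >= observed:
            exceed += 1
    return (exceed + 1) / (n_perm + 1)


def synthetic_ensemble(n_members, n_vars, shift, rng):
    """Hypothetical stand-in for per-member climate statistics."""
    return [[rng.gauss(shift, 1.0) for _ in range(n_vars)]
            for _ in range(n_members)]


rng = random.Random(42)
control = synthetic_ensemble(10, 3, 0.0, rng)
shifted = synthetic_ensemble(10, 3, 10.0, rng)  # deliberately "climate-changing"
p_shifted = permutation_p_value(control, shifted)
print(f"p-value for shifted ensemble: {p_shifted:.3f}")
```

A small p-value suggests that the two ensembles are not drawn from the same distribution, which is the signal a reproducibility test looks for. The significance threshold itself is a choice for the test designer.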

Barotropic Solver for Semi-Implicit Ocean

Algorithm choices in some E3SM components limit the scalability of the integrated system. In the ocean model, the solution of the fast barotropic mode limits throughput and scalability. The previous DOE ocean model (POP) solved the barotropic mode using a semi-implicit scheme. This scheme converged very slowly and required global reductions on each of the hundreds of solver iterations. For that reason, the MPAS-Ocean developers chose to implement an explicit subcycling approach for the fast barotropic mode. Unfortunately, subcycling requires a transfer of small, latency-dominated messages (halo updates) on every subcycled step, so it too limits scalability. Subcycling also retains some undesirable stability and consistency issues. Recent developments encourage scientists to revisit semi-implicit approaches. On the system side, Summit’s fat-tree network has a much smaller bisection bandwidth than Titan (lower latencies), and it offers new “SHARP” collectives that are processed on the network switches for significantly faster MPI Allreduce operations. Together, these significantly reduce the penalty for algorithms with global dependence and make a semi-implicit solution more attractive computationally. On the algorithm side, advances in high-performance solvers and preconditioners are expected to improve convergence and reduce iteration counts. Developers will leverage ECP’s ForTrilinos (a Fortran interface to Trilinos solvers) to access advanced solver and preconditioner libraries and create a fast, scalable semi-implicit barotropic solver option. The team includes Trilinos linear solver experts, one of whom developed an exceptionally fast and scalable preconditioner for E3SM’s ice sheet velocity solver (Tuminaro, 2016). Given the prior semi-implicit implementations in ocean models, developers are confident that a new, well-optimized implementation in MPAS is achievable in the near term and will enhance the performance of the E3SM model.
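The cost structure discussed above (global reductions on every solver iteration, with preconditioning as a way to cut the iteration count) can be made concrete with a small preconditioned conjugate gradient sketch. Everything here is an illustrative assumption: the 1D tridiagonal operator is only loosely modeled on a Helmholtz-type barotropic system with variable depth, Jacobi scaling stands in for the far more capable Trilinos preconditioners, and each counted dot product represents what would be an MPI Allreduce in a distributed run.

```python
import math


class ReductionCounter:
    """Counts dot products; each would be a global reduction under MPI."""

    def __init__(self):
        self.count = 0

    def dot(self, a, b):
        self.count += 1
        return sum(x * y for x, y in zip(a, b))


def build_operator(face_depths, c):
    """Tridiagonal SPD matrix for a 1D operator c*I - d/dx(H d/dx)."""
    n = len(face_depths) - 1
    diag = [c + face_depths[i] + face_depths[i + 1] for i in range(n)]
    off = [-face_depths[i + 1] for i in range(n - 1)]
    return diag, off


def matvec(diag, off, x):
    n = len(diag)
    y = [diag[i] * x[i] for i in range(n)]
    for i in range(n - 1):
        y[i] += off[i] * x[i + 1]
        y[i + 1] += off[i] * x[i]
    return y


def pcg(diag, off, b, counter, jacobi=False, tol=1e-10, max_iter=1000):
    """Conjugate gradients with an optional Jacobi (diagonal) preconditioner."""
    n = len(b)
    x = [0.0] * n
    r = list(b)
    z = [r[i] / diag[i] for i in range(n)] if jacobi else list(r)
    p = list(z)
    rz = counter.dot(r, z)
    b_norm = math.sqrt(counter.dot(b, b))
    for it in range(1, max_iter + 1):
        ap = matvec(diag, off, p)
        alpha = rz / counter.dot(p, ap)
        x = [x[i] + alpha * p[i] for i in range(n)]
        r = [r[i] - alpha * ap[i] for i in range(n)]
        if math.sqrt(counter.dot(r, r)) <= tol * b_norm:
            return x, it
        z = [r[i] / diag[i] for i in range(n)] if jacobi else list(r)
        rz_new = counter.dot(r, z)
        p = [z[i] + (rz_new / rz) * p[i] for i in range(n)]
        rz = rz_new
    return x, max_iter


n = 32
depths = [1.0 + 99.0 * k / n for k in range(n + 1)]  # hypothetical depth profile
diag, off = build_operator(depths, c=1.0)
b = [1.0] * n
for use_jacobi in (False, True):
    counter = ReductionCounter()
    _, iters = pcg(diag, off, b, counter, jacobi=use_jacobi)
    print(f"jacobi={use_jacobi}: {iters} iterations, {counter.count} reductions")
```

In this sketch the reduction count grows linearly with the iteration count, which is why both faster collectives and better preconditioners bear directly on the scalability of a semi-implicit solve.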

Atmospheric Chemistry Solver

In this task, the team is looking to capitalize on algorithmic improvements in the atmospheric chemistry nonlinear solver to speed up the solution and increase robustness. The second-order Rosenbrock (ROS-2) solver has already been implemented in the CAM4 atmosphere model, where it achieved roughly a twofold speedup over the original first-order implicit solver. The most expensive component of the previous solver was the frequent updating of the Jacobian matrix and its LU factorization. The ROS-2 solver reuses the same Jacobian matrix and LU factorization across its two stages, saving computation time. A long-term simulation (a 10-year run) shows that this computational speedup is stable. Accuracy is also improved. Unlike current models, which strongly overestimate surface ozone concentration over the conterminous U.S., the ROS-2 solver clearly reduces the surface ozone bias. Because the chemistry computation acts like a box model in each grid cell, the ordinary differential equations can be solved independently on a GPU, and developers expect additional computational gains on the Summit machine at the Oak Ridge Leadership Computing Facility (OLCF). The team will apply the ROS-2 solver to the chemistry solver in the E3SM Atmosphere Model and port it to run on Summit’s GPUs.
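The reuse of a single Jacobian and factorization across both stages can be seen in a minimal sketch of a two-stage, second-order Rosenbrock step. The sketch uses the commonly published ROS2 coefficients with gamma = 1 + 1/sqrt(2). The two-species reversible reaction, the rate constants, and the explicit 2x2 inverse (standing in for an LU factorization) are assumptions for illustration and are not taken from the CAM4 or E3SM code.

```python
import math

GAMMA = 1.0 + 1.0 / math.sqrt(2.0)  # standard L-stable choice for ROS2


def rhs(y, a, b):
    """Toy chemistry: A -> B at rate a, B -> A at rate b."""
    return [-a * y[0] + b * y[1], a * y[0] - b * y[1]]


def jacobian(y, a, b):
    """Jacobian of rhs (constant here because the toy system is linear)."""
    return [[-a, b], [a, -b]]


def invert_2x2(m):
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]


def apply(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]


def ros2_step(y, h, a, b):
    """One ROS2 step; (I - gamma*h*J) is built and 'factored' only once."""
    jac = jacobian(y, a, b)
    m = [[(1.0 if i == j else 0.0) - GAMMA * h * jac[i][j] for j in range(2)]
         for i in range(2)]
    m_inv = invert_2x2(m)  # stands in for an LU factorization
    k1 = apply(m_inv, rhs(y, a, b))  # stage 1 solve
    y_stage = [y[i] + h * k1[i] for i in range(2)]
    f2 = rhs(y_stage, a, b)
    k2 = apply(m_inv, [f2[i] - 2.0 * k1[i] for i in range(2)])  # stage 2 reuses m_inv
    return [y[i] + 1.5 * h * k1[i] + 0.5 * h * k2[i] for i in range(2)]


def integrate(y0, h, n_steps, a, b):
    y = list(y0)
    for _ in range(n_steps):
        y = ros2_step(y, h, a, b)
    return y


# Stiff test: rate 1000 vs. 1, with a step far larger than the fast time scale.
y_end = integrate([1.0, 0.0], h=0.1, n_steps=200, a=1000.0, b=1.0)
print(y_end, sum(y_end))
```

Because each grid cell's system is independent, a step like `ros2_step` could in principle be applied to many cells at once, which is the property that makes the chemistry solve a natural fit for GPUs.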

IMEX for Nonhydrostatic Atmosphere Dynamics

Members of the team have recently developed a nonhydrostatic atmospheric dycore, the nonhydrostatic High Order Method Modeling Environment (HOMME-NH), for the high-resolution simulations expected in the v3 and v4 science campaigns. In this small research effort, scientists will look to improve the performance and scalability of HOMME-NH by developing a low-storage, stage-parallel implicit-explicit (IMEX) Runge-Kutta (RK) time integrator. The equations of motion for the nonhydrostatic atmosphere support vertically propagating acoustic waves, which necessitate the use of an IMEX RK method for efficient time integration in HOMME-NH. IMEX RK methods require the solution of a nonlinear system of equations at each implicit stage. A stage-parallel IMEX RK method, in which the nonlinear equations arising at each implicit stage (or at some subset of implicit stages) can be solved in parallel, can shorten the run time of HOMME-NH. The proposed work is to develop a low-storage, stage-parallel IMEX RK method with a large stability region, in order to improve performance and scalability and to reduce memory requirements.
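The basic idea behind IMEX time integration, treating the fast term implicitly and the slow term explicitly, can be shown with the simplest possible scheme. The sketch below is a first-order IMEX Euler step on a scalar test problem. It is not a Runge-Kutta method, it is neither low-storage nor stage-parallel, and its implicit term is linear, so no nonlinear solve is needed. The problem, the rate `lam`, and the step size are assumptions chosen only to make the stability contrast visible.

```python
def imex_euler_step(y, h, f_explicit, lam):
    """Advance y' = f_explicit(y) - lam*y by one step.

    The slow term f_explicit is evaluated at the old state (explicit);
    the fast term -lam*y is evaluated at the new state (implicit), which
    for this linear term reduces to a division.
    """
    return (y + h * f_explicit(y)) / (1.0 + h * lam)


def explicit_euler_step(y, h, f_explicit, lam):
    """Fully explicit step for comparison; unstable when h*lam is large."""
    return y + h * (f_explicit(y) - lam * y)


def integrate(step, y0, h, n_steps, f_explicit, lam):
    y = y0
    for _ in range(n_steps):
        y = step(y, h, f_explicit, lam)
    return y


def slow_term(y):
    """Hypothetical slow, non-stiff tendency."""
    return -y


h, lam = 0.01, 1000.0  # h*lam = 10, far beyond the explicit stability limit
y_imex = integrate(imex_euler_step, 1.0, h, 20, slow_term, lam)
y_expl = integrate(explicit_euler_step, 1.0, h, 20, slow_term, lam)
print(f"IMEX: {y_imex:.3e}, explicit: {y_expl:.3e}")
```

With the fast term handled implicitly, the step size is set by the slow dynamics alone, which is the motivation for using IMEX methods when fast acoustic waves are present.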

Whereas much performance work focuses on on-node optimizations, the following two tasks target the inter-node (MPI) communication costs, which are particularly significant at the strong-scaling, high-throughput limit.

Reducing MPI Communication in Semi-Lagrangian Transport

In the E3SM atmosphere dynamical core, tracer transport has a large computational cost. Previously, in the LEAP-T project, researchers developed methods and prototyped software for mass-conserving, shape-preserving, consistent, semi-Lagrangian (SL) tracer transport, and demonstrated a 2.5x speedup over the current spectral element (SE) method for the case with 40 tracers at the strong-scaling limit of 1 element/core. However, the current MPI communication pattern is suboptimal for SL methods because it communicates information from the full halo of neighboring elements. By first calculating and communicating which subset of elements within the halo needs to communicate with the reference element, and then sending only that subset of data, the communication volume will be significantly reduced. For larger time steps, this would be a 3x reduction in communication volume over the current SL implementation and a 9x reduction over the v1 SE code.
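The idea of identifying the needed subset of the halo can be sketched on a toy grid. The example below assumes a doubly periodic 2D grid of unit-square elements, a uniform velocity, and a handful of interior sample points per element. It traces those points back along the flow and records which neighboring elements contain the departure points. The grid, the sample offsets, and the function names are hypothetical, and the sketch covers only the calculation of the subset, not the MPI exchange that would follow.

```python
import math

# Hypothetical interior sample points within a unit element (fractions of width).
OFFSETS = (0.125, 0.375, 0.625, 0.875)


def upstream_elements(elem, velocity, dt, nx, ny):
    """Neighbor elements that contain departure points of `elem`."""
    i, j = elem
    u, v = velocity
    needed = set()
    for ox in OFFSETS:
        for oy in OFFSETS:
            # Trace the sample point back along the (uniform) velocity.
            x_dep = i + ox - u * dt
            y_dep = j + oy - v * dt
            src = (math.floor(x_dep) % nx, math.floor(y_dep) % ny)
            if src != (i, j):
                needed.add(src)
    return needed


def full_halo(elem, nx, ny):
    """All eight neighbors of `elem` on the periodic grid."""
    i, j = elem
    return {((i + di) % nx, (j + dj) % ny)
            for di in (-1, 0, 1) for dj in (-1, 0, 1)
            if (di, dj) != (0, 0)}


nx = ny = 4
elem = (2, 2)
zonal = upstream_elements(elem, (0.6, 0.0), 1.0, nx, ny)
diagonal = upstream_elements(elem, (0.6, 0.6), 1.0, nx, ny)
print(f"full halo: {len(full_halo(elem, nx, ny))} elements")
print(f"zonal flow needs: {sorted(zonal)}")
print(f"diagonal flow needs: {sorted(diagonal)}")
```

In this toy setting, only the upstream neighbors need to send data, so the savings depend on the flow direction and the time step. The reduction factors quoted above come from the project's own estimates, not from this sketch.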

Task Placement for Faster MPI Communication

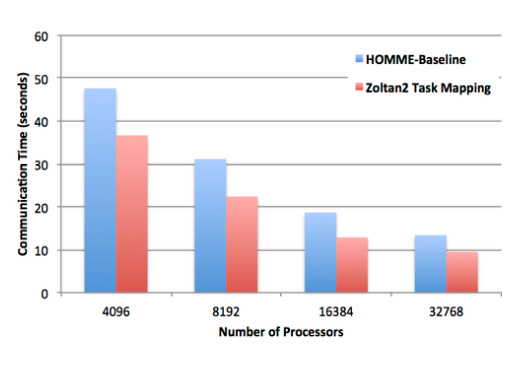

Zoltan2’s geometric task mapping reduces the communication time of HOMME by up to 31% by placing interdependent tasks on “nearby” processors of the IBM BG/Q platform Mira.

Task mapping (the assignment of a parallel application’s MPI tasks to the processors of a parallel computer) is increasingly important as the number of computing units in new supercomputers grows. The goal is to assign tasks to cores so that interdependent tasks are performed by “nearby” cores, thus lowering the distance messages must travel, the amount of congestion in the network, and the overall cost of communication. To accomplish this, the task mapping algorithm needs to know the compute nodes assigned by the machine’s queuing system as well as the underlying network topology. By querying the computer for this information, which is possible on the main DOE compute platforms, developers can exploit existing geometric partitioning algorithms to find an improved mapping. When this is done at the beginning of a run, a simple permutation of the MPI rank IDs is all that is needed to impose the improved task mapping. In a previous project, developers interfaced the task mapping algorithms in the Zoltan2 library to the HOMME atmosphere dycore, with a striking 31% reduction in communication time when running on 32K cores of the IBM Blue Gene/Q machine, Mira, at the Argonne Leadership Computing Facility (ALCF), as shown in the figure above (Deveci et al., 2018).
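The mapping step described above can be sketched as a rank permutation built from a geometric ordering of both tasks and processors. The example below uses a simple recursive coordinate bisection written for this illustration. It is not Zoltan2's algorithm or API, and the 4x4 task grid, the 2D mesh network with Manhattan hop counts, and the scrambled allocation are all assumptions (Mira's actual network is a 5D torus).

```python
import random


def rcb_order(ids, coords):
    """Order ids by recursive coordinate bisection of their coordinates."""
    ids = list(ids)
    if len(ids) <= 1:
        return ids
    xs = [coords[i][0] for i in ids]
    ys = [coords[i][1] for i in ids]
    # Cut along the dimension with the larger extent.
    dim = 0 if max(xs) - min(xs) >= max(ys) - min(ys) else 1
    ordered = sorted(ids, key=lambda i: (coords[i][dim], coords[i][1 - dim]))
    half = len(ordered) // 2
    return rcb_order(ordered[:half], coords) + rcb_order(ordered[half:], coords)


def geometric_mapping(task_coords, proc_coords):
    """Permutation: mapping[task] = rank, pairing the two RCB orderings."""
    task_order = rcb_order(range(len(task_coords)), task_coords)
    proc_order = rcb_order(range(len(proc_coords)), proc_coords)
    mapping = [None] * len(task_coords)
    for task, rank in zip(task_order, proc_order):
        mapping[task] = rank
    return mapping


def total_hops(mapping, task_edges, proc_coords):
    """Sum of Manhattan distances between ranks of communicating tasks."""
    hops = 0
    for s, t in task_edges:
        (x1, y1), (x2, y2) = proc_coords[mapping[s]], proc_coords[mapping[t]]
        hops += abs(x1 - x2) + abs(y1 - y2)
    return hops


n = 4
task_coords = [(i, j) for i in range(n) for j in range(n)]
index = {c: k for k, c in enumerate(task_coords)}
# Each task communicates with its right and upper neighbors (24 edges).
task_edges = [(index[(i, j)], index[(i + di, j + dj)])
              for i in range(n) for j in range(n)
              for di, dj in ((1, 0), (0, 1))
              if i + di < n and j + dj < n]

# Hypothetical allocation: rank r sits at a scrambled mesh coordinate.
proc_coords = list(task_coords)
random.Random(0).shuffle(proc_coords)

default_mapping = list(range(n * n))  # task t runs on rank t
improved_mapping = geometric_mapping(task_coords, proc_coords)
default_hops = total_hops(default_mapping, task_edges, proc_coords)
improved_hops = total_hops(improved_mapping, task_edges, proc_coords)
print(f"default: {default_hops} hops, improved: {improved_hops} hops")
```

The result is just a permutation of rank IDs, so it can be imposed once at startup without changing the application's communication code.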

The team will extend the task placement work to other components of the E3SM model, such as the MPAS components. Researchers will also work to deploy task mapping to the full coupled model, to improve the placement of tasks in one component (e.g., atmosphere) relative to those in another (e.g., ocean) which are numerically coupled. The task is staffed with one of the main developers of the new MOAB-based coupler as well as members of the Zoltan2 library team (whose work in this area is partially supported by the FASTMath SciDAC Institute). By bringing the Zoltan2 team and software into E3SM, developers also open the door to further impact in the area of combinatorial algorithms, including improved mesh partitioning.