Can We Switch Computers? An Application of E3SM Climate Reproducibility Tests

The historical and current-climate simulations for E3SM’s contribution to Phase 6 of the internationally coordinated Coupled Model Intercomparison Project (CMIP6) were run on a supercomputer called Edison at the National Energy Research Scientific Computing Center (NERSC). Edison was decommissioned in May 2019, so future projections for CMIP6 will need to be run on a different computer. The most likely replacement is another supercomputer at NERSC called Cori, which raises an uncomfortable question: will the difference between simulated past and future climates be due to greenhouse gases… or due to changing computers? Thanks to the Climate Model Development and Validation Software Modernization (CMDV-SM) project, E3SM developers now have three distinct methods to answer this and other questions about the reproducibility of a climate simulation.

Background

Exact numerical reproducibility, the requirement that two identically configured simulations produce results that match to the last digit of every number, is very useful during the development and testing of a model. Maintaining it whenever optional features are added, or code is modified for computational performance or readability, safeguards against unintended changes in model behavior. The ability to exactly re-run a crashed simulation with more diagnostic output is also a huge help for debugging.

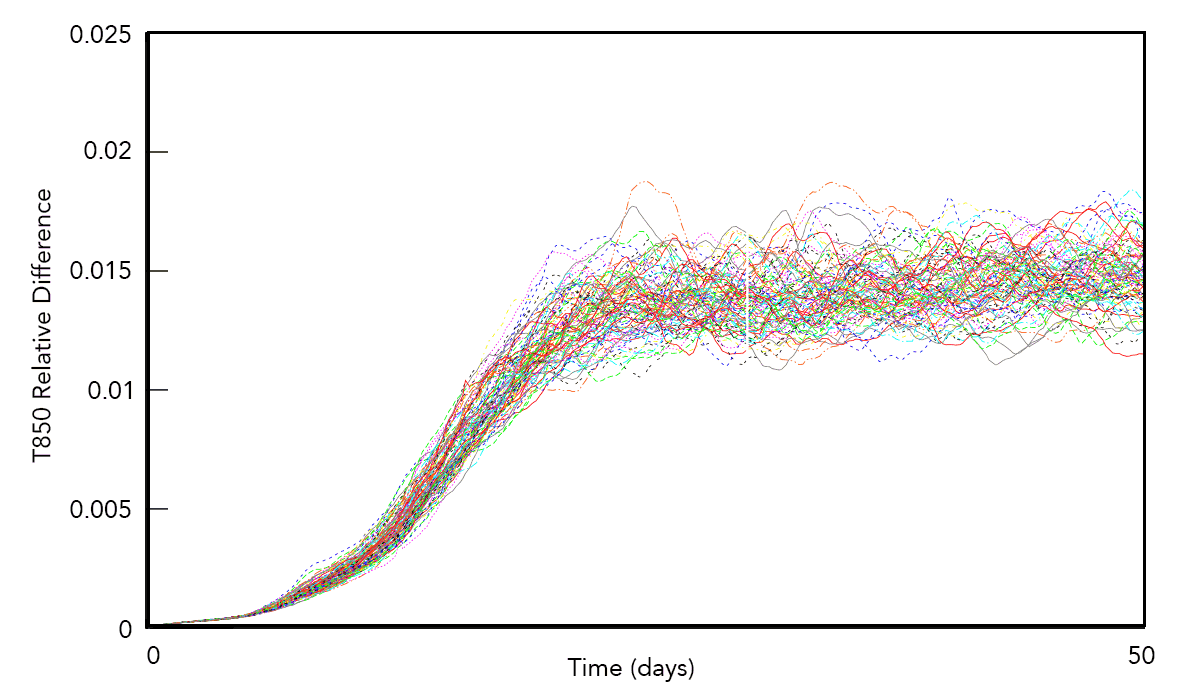

Growth of differences in 850 mb temperature (in Kelvin, using the L1 norm) between each pair of simulations in a 100-member ensemble. Adapted from Mahajan et al., 2017 ICCS.
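
The kind of growth shown in the figure can be reproduced qualitatively with a toy chaotic system. The minimal sketch below uses the three-variable Lorenz-63 model as a stand-in for E3SM (an assumption made purely for illustration), perturbs each ensemble member's initial state by a roundoff-sized amount, and tracks the mean L1 norm of the state differences over all member pairs. The member count, perturbation size `eps`, time step, and run length are hypothetical choices, not E3SM settings.

```python
import itertools


# Toy stand-in for the atmosphere: the Lorenz-63 system with its classic
# chaotic parameters (an illustrative assumption, not E3SM).
def lorenz_rhs(s, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    x, y, z = s
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(s, dt):
    """Advance state s by one fourth-order Runge-Kutta step."""
    k1 = lorenz_rhs(s)
    k2 = lorenz_rhs(tuple(si + 0.5 * dt * ki for si, ki in zip(s, k1)))
    k3 = lorenz_rhs(tuple(si + 0.5 * dt * ki for si, ki in zip(s, k2)))
    k4 = lorenz_rhs(tuple(si + dt * ki for si, ki in zip(s, k3)))
    return tuple(si + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                 for si, a, b, c, d in zip(s, k1, k2, k3, k4))


def mean_pairwise_l1(states):
    """Mean over all member pairs of the L1 norm of their state difference."""
    pairs = list(itertools.combinations(states, 2))
    total = sum(sum(abs(a - b) for a, b in zip(p, q)) for p, q in pairs)
    return total / len(pairs)


def ensemble_divergence(n_members=5, eps=1e-14, dt=0.01, n_steps=5000, every=500):
    """Return (time, mean pairwise L1 difference) samples for a perturbed ensemble.

    Member i starts from x = 1 + i*eps, so members differ only at roundoff level.
    """
    states = [(1.0 + eps * i, 1.0, 1.0) for i in range(n_members)]
    history = [(0.0, mean_pairwise_l1(states))]
    for step in range(1, n_steps + 1):
        states = [rk4_step(s, dt) for s in states]
        if step % every == 0:
            history.append((step * dt, mean_pairwise_l1(states)))
    return history


if __name__ == "__main__":
    for t, d in ensemble_divergence():
        print(f"t = {t:5.1f}   mean pairwise L1 difference = {d:.3e}")
```

On a log scale the differences should grow roughly exponentially from about 1e-14 and then level off at the size of the attractor, qualitatively like the saturation in the figure; the toy model says nothing about E3SM's actual growth rates.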

But exact reproducibility can be tedious to achieve. It also severely limits the optimizations a compiler is allowed to perform. For example, E3SM scientist Noel Keen found that turning off some of the compiler flags that enforce reproducibility sped up E3SM by 5-10%.

There are also situations where exact reproducibility is simply impossible to achieve. Changing computer hardware (e.g. moving from Edison to Cori, as mentioned above) is one such case. Another involves newer computer architectures that use many specialized, low-power processing units to reduce power consumption while increasing computational speed. To achieve higher performance, these architectures may not maintain a strict order of computation each time code is executed. Because computers store only a finite number of digits of each number, floating-point arithmetic is not associative: performing mathematically equivalent calculations in a different order can yield a slightly different answer. Because atmospheric motions are inherently chaotic, these tiny differences grow quickly, as shown in the figure above. After a week or two, the trajectories of individual storms diverge, rain falls in different locations and at different intensities, and the weather generally differs between each pair of simulations. The long-term statistics of the meteorological state, however, remain the same, and those statistics are what climate scientists care about. Until now, determining whether the climates of two E3SM simulations are statistically equivalent has required experts to evaluate a large number of climate variables by hand.
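
The non-associativity described above is easy to observe directly. This Python sketch sums the same numbers in different orders, including a chunked sum that loosely mimics a reduction split across several processing units; `chunked_sum` and its chunking scheme are illustrative assumptions, not how E3SM or any particular machine actually performs reductions.

```python
import math
import random


def serial_sum(values):
    """Accumulate left to right, like a single-threaded loop."""
    total = 0.0
    for v in values:
        total += v
    return total


def chunked_sum(values, n_chunks):
    """Sum contiguous chunks separately, then add the partial sums.

    This loosely mimics a (hypothetical) reduction split across n_chunks
    processing units: the same additions, performed in a different order.
    """
    size = math.ceil(len(values) / n_chunks)
    partials = [serial_sum(values[i:i + size]) for i in range(0, len(values), size)]
    return serial_sum(partials)


if __name__ == "__main__":
    # Grouping changes the rounding: prints 0.6000000000000001 0.6
    print((0.1 + 0.2) + 0.3, 0.1 + (0.2 + 0.3))
    # Order decides whether the 1.0 survives: prints 0.0 1.0
    print(serial_sum([1e16, 1.0, -1e16]), serial_sum([1e16, -1e16, 1.0]))

    # With many values, serial and chunked sums usually differ in the last digits.
    rng = random.Random(42)
    data = [rng.random() for _ in range(10_000)]
    s_serial = serial_sum(data)
    s_chunked = chunked_sum(data, 8)
    print(s_serial, s_chunked, s_serial - s_chunked)
    # math.fsum tracks exact partial sums, so its result does not depend on order.
    print(math.fsum(data))
```

Order-independent summation such as `math.fsum` removes this particular discrepancy but costs extra work, which illustrates the trade-off between exact reproducibility and speed described above.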

Description of Tests

The CMDV-SM project changes this situation by providing three methods for evaluating whether a change in code or computing environment affects the model climate. All three methods run ensembles of simulations with both the original and the modified configuration and use them to test the null hypothesis that the two configurations are statistically the same. The three tests are:

- The Kolmogorov-Smirnov test evaluates the null hypothesis that, for each variable, the global annual averages from the two ensembles (each consisting of 30 one-year simulations) come from the same probability distribution.

- The perturbation growth test first quantifies the characteristic rate of divergence between two trusted simulations that differ only in roundoff-level initial perturbations. The test then evaluates the null hypothesis that a new ensemble and a trusted ensemble, each containing 12 one-time-step simulations, are statistically identical.

- The time-step convergence test exploits the fact that very short E3SM atmosphere simulations exhibit self-convergence: their results approach a small-time-step reference solution as the model time step is shortened while everything else is kept the same. The test evaluates whether a new code or computing environment produces results that still converge to the same trusted reference.
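
A minimal sketch of the per-variable comparison in the Kolmogorov-Smirnov test, written against the Python standard library only. It assumes each ensemble is given as a dict mapping (hypothetical) variable names to one global annual mean per member, computes the two-sample KS statistic, and converts it to an approximate p-value with the asymptotic Kolmogorov formula and the effective-size correction given in Numerical Recipes. How many per-variable rejections should make the overall comparison fail is left to the caller here; the E3SM test applies its own criterion, which this sketch does not reproduce. In practice `scipy.stats.ks_2samp` computes the same statistic with more careful small-sample p-values.

```python
import math
import random
from bisect import bisect_right


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic D = max_x |F_a(x) - F_b(x)|.

    Both empirical CDFs are step functions that jump only at sample points,
    so it suffices to evaluate them at every observed value.
    """
    a, b = sorted(a), sorted(b)
    return max(abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
               for x in a + b)


def ks_pvalue(d, n, m):
    """Approximate two-sided p-value from the asymptotic Kolmogorov distribution."""
    ne = n * m / (n + m)
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    if lam < 0.2:  # the series converges poorly here, and the true value is ~1
        return 1.0
    q = 2.0 * sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
                  for k in range(1, 101))
    return min(max(q, 0.0), 1.0)


def rejected_variables(baseline, test, alpha=0.05):
    """Names of variables whose ensemble distributions differ at level alpha.

    baseline, test: dicts mapping a variable name to a list of global annual
    means, one value per ensemble member.
    """
    rejected = []
    for name, base_vals in baseline.items():
        d = ks_statistic(base_vals, test[name])
        if ks_pvalue(d, len(base_vals), len(test[name])) < alpha:
            rejected.append(name)
    return rejected


if __name__ == "__main__":
    rng = random.Random(1)
    names = [f"var{i}" for i in range(10)]  # hypothetical variable names

    def ensemble(shift):
        # 30 synthetic "one-year global means" per variable
        return {v: [rng.gauss(shift, 1.0) for _ in range(30)] for v in names}

    base = ensemble(0.0)
    print("same climate:   ", rejected_variables(base, ensemble(0.0)))
    print("shifted climate:", rejected_variables(base, ensemble(1.0)))
```

With the null hypothesis true, about `alpha` of the variables are still expected to be rejected by chance, which is why some rule for tolerating a few rejections is needed on top of the per-variable tests.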

Of the three methods, only the first directly addresses the climate statistics that E3SM developers care about. The other two use the model’s behavior in short simulations (from one to a few hundred time steps long) as a proxy for its long-term behavior. These two tests are faster to run, but they do not directly assess the simulated climate.
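
The idea behind the time-step convergence test can be illustrated with a scalar toy equation standing in for the atmosphere model (an assumption made for illustration). A trusted solver run with a very small time step serves as the reference. Shrinking the time step of the trusted solver drives its distance from the reference toward zero, while a hypothetical code change that alters the equation itself (here, the damping coefficient `k`) converges to a different answer, so its distance levels off instead. The equation, the `k` values, and the time steps are invented for this sketch and do not correspond to the actual TSC1.0 configuration.

```python
import math


def euler_solve(k, dt, t_end=1.0, y0=1.0):
    """Integrate the toy equation dy/dt = -k*y + sin(t) with forward Euler."""
    n_steps = round(t_end / dt)
    y = y0
    for i in range(n_steps):
        y += dt * (-k * y + math.sin(i * dt))
    return y


def errors_vs_reference(k_test, dts, k_trusted=1.0, dt_ref=1e-4):
    """Distance from a trusted small-time-step reference for each step size."""
    y_ref = euler_solve(k_trusted, dt_ref)
    return [abs(euler_solve(k_test, dt) - y_ref) for dt in dts]


if __name__ == "__main__":
    dts = [0.1, 0.05, 0.025, 0.0125]
    trusted = errors_vs_reference(1.0, dts)   # unchanged code
    modified = errors_vs_reference(1.1, dts)  # hypothetical code change
    for dt, e_t, e_m in zip(dts, trusted, modified):
        print(f"dt = {dt:<7} trusted error = {e_t:.2e}   modified error = {e_m:.2e}")
```

For the trusted solver, halving `dt` should roughly halve the error, as expected for first-order forward Euler, while the modified solver's error stalls near the size of the change it introduced. A real test also needs a statistical threshold for deciding when the error is too large, which this sketch omits.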

The Verdict: Cori and Edison are Statistically Indistinguishable

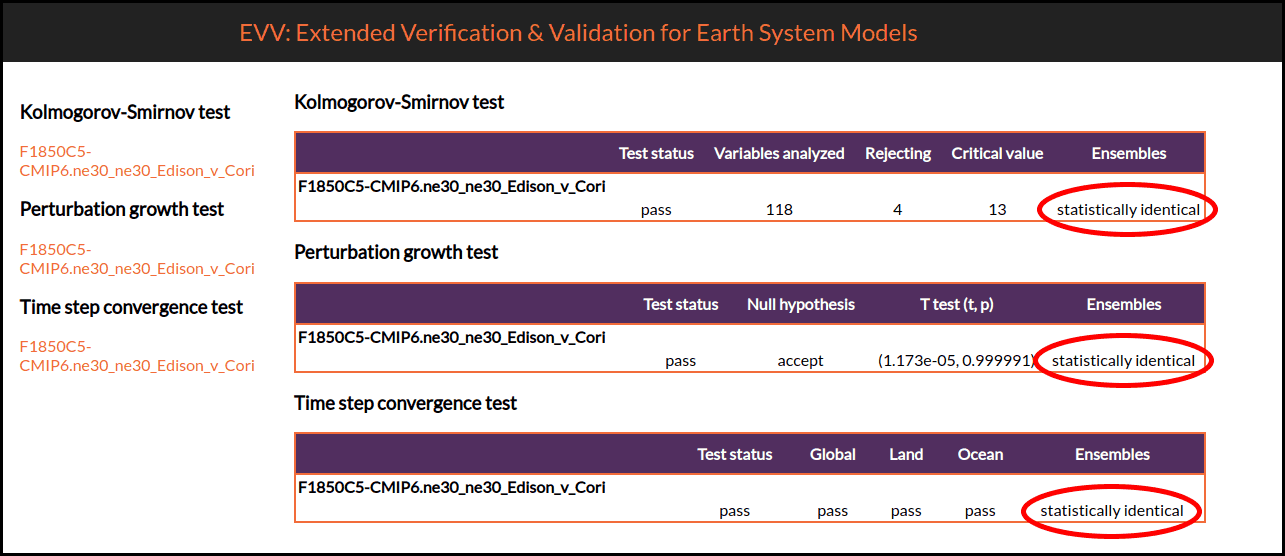

Although the CMDV-SM team has tested the three approaches on a number of idealized case studies, the retirement of Edison was these methods’ first big test. The team used all three methods to compare simulations on Edison and Cori. As shown in the figure below, all methods found Cori and Edison to indeed produce statistically indistinguishable results. The fact that three very different approaches all support the same conclusion provides strong evidence that CMIP6 simulations can be continued on Cori without fear of machine-induced climate differences.

After running the suite of climate reproducibility tests, a portable website is created which shows the status of each test and provides links to detailed graphics, tables, and information related to each test. For the Cori vs. Edison comparison shown here, ensembles on both machines are found to be statistically identical by all tests.

Availability and Implications

Further comparisons of the different methods are planned, but all three tests are available for use on the master branch of E3SM now. For instructions on running the tests, see this GitHub page. The CMDV-SM team is particularly excited about the possibilities these tests create for improving computational performance. Reordering computations can often improve speed, but it can also change answers. Because the model climate had to be checked by hand by experts every time reproducibility was broken, exploring minor algorithmic changes to increase speed was impractical until now. At the moment, the tests only assess atmosphere model output. Work is underway, however, to extend the climate reproducibility test to include ocean output. The perturbation growth and time-step convergence tests could also be extended to other E3SM components.

The ability to test for climate differences between pairs of runs in an automated, statistically rigorous way is a huge advance for E3SM. Not only do these climate reproducibility tests allow E3SM developers to spread production runs across multiple machines (e.g. Edison and Cori, as described above), they also drastically reduce the testing burden for a wide class of code changes.

Credits

Salil Mahajan (ORNL), Balwinder Singh (PNNL), and Hui Wan (PNNL) created the Kolmogorov-Smirnov, Perturbation Growth, and Time Step Convergence tests (respectively). Joe Kennedy (ORNL) integrated these tests into the E3SM testing suite and created the website for viewing test results. Peter Caldwell (LLNL) orchestrated the implementation efforts, and Andy Salinger (SNL) was the principal investigator of the CMDV-SM project.

Publications

Mahajan, S., K. J. Evans, J. Kennedy, M. L. Branstetter, M. Xu, and M. Norman (2019): “A multivariate approach to ensure statistical reproducibility of climate model simulations”, In Proceedings of the Platform for Advanced Scientific Computing (PASC), June 12-14, 2019, Zurich, Switzerland, ACM, New York, NY, USA, Article 14. https://doi.org/10.1145/3324989.3325724

Mahajan, S., K. J. Evans, J. Kennedy, M. L. Branstetter, M. Xu, and M. Norman (2019): “Ongoing solution reproducibility of earth system models as they progress toward exascale computing”, International Journal of High Performance Computing Applications, Special Issue for the Computational Reproducibility at Exascale Workshop 2017, Supercomputing 2017. https://doi.org/10.1177/1094342019837341

Mahajan, S., A. L. Gaddis, K. J. Evans and M. Norman (2017): “Exploring an ensemble-based approach to atmospheric climate modeling and testing at scale”, Procedia Computer Science, 108, 735-744. https://doi.org/10.1016/j.procs.2017.05.259

Singh, B., P. J. Rasch, H. Wan, W. Ma, P. H. Worley, and J. Edwards (2019): “A verification strategy for atmospheric model codes using initial condition perturbations”, in preparation for Geoscientific Model Development (GMD).

Wan, H., K. Zhang, P. J. Rasch, B. Singh, X. Chen, and J. Edwards (2017): “A new and inexpensive non-bit-for-bit solution reproducibility test based on time step convergence (TSC1.0)”, Geoscientific Model Development, 10(2), 537-552. PNNL-SA-118076. https://doi.org/10.5194/gmd-10-537-2017

Contact: Peter Caldwell, Lawrence Livermore National Laboratory