Long-Term Roadmap

To achieve the grand challenge of actionable Earth system simulations and projections to address the most critical challenges facing our nation and DOE, the E3SM project defined its strategy in a roadmap with four intersecting project elements:

- Major simulations. A series of simulation-and-projection experiments addressing mission needs with actionable scientific results.

- Model development. A well-documented, tested, continuously improving system of model codes that comprise the E3SM Earth system model.

- Leadership architectures. The ability to make effective use of leading-edge (and “bleeding-edge”) computational facilities soon after their deployment at DOE national laboratories.

- Infrastructure. An infrastructure to support code development, hypothesis testing, simulation execution, and analysis of results.

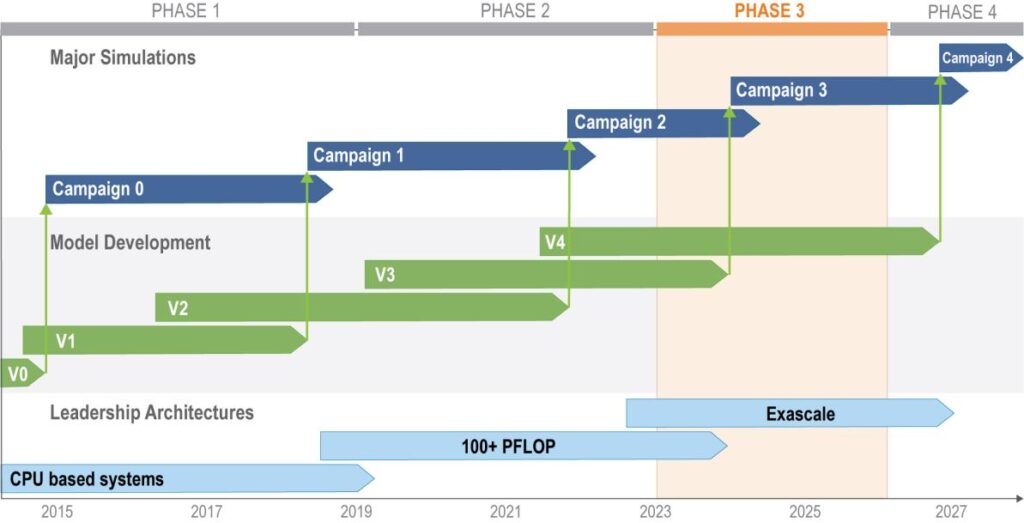

Figure 1 depicts the E3SM Project Roadmap, including Phases 1-3 of the original ~10-year plan and Phase 4, which begins the project’s next 10 years. The roadmap shows the relationships among the first three major project elements: the simulations, the modeling system that performs those simulations, and the machines on which they are executed. Unlike the first three elements, which have distinct but overlapping phases, the fourth element, the infrastructure, evolves continuously based on the requirements imposed by project needs.

Element 1: Major Simulations

The need for DOE-relevant, actionable, scientific results underpins our science questions and simulations. Our focus at the project’s outset was three critically important Earth-system science drivers that strongly influence, and are influenced by, the energy system: the water cycle, biogeochemistry, and the cryosphere system. To align our science drivers more explicitly with our goal of actionable modeling and projections of Earth system variability and change, while maintaining the foundational science on which they depend, we have redefined our drivers as: (1) Water Cycle Changes and Impacts; (2) Human-Earth System Feedbacks; and (3) Polar Processes, Sea Level Rise, and Coastal Impacts. These more explicit drivers highlight specific aspects of the Earth system that connect our model development and simulation campaigns to challenges facing our nation and DOE.

The ensemble simulations of the coupled human-Earth system at ultra-high resolution that we envisioned from our three-pronged strategy to address our grand challenge are not yet possible with current model and computing capabilities. Nevertheless, for each version of our modeling system, we developed a set of achievable experiments that make major advances toward our grand challenge goal by running the model on the leadership computing architectures available to the project during each phase. In this way, each model version is used in a “Simulation Campaign” consisting of major simulations that address the science questions of each science driver. Our simulation results have been used to refine science questions and develop new testable hypotheses to be addressed with subsequent versions of the modeling system. As shown in Figure 1, each E3SM simulation campaign is performed over four to five years with a successive version of the modeling system, and every campaign informs the next. We envision four campaigns with successive versions (0-3) of the modeling system leading to the simulations that address our original long-term goal in approximately 10 years. Simulation Campaign 4, using E3SM v4, marks the beginning of the next 10 years and will feature the transformative capabilities we envision for the next-generation model: a multi-resolution, GPU-enabled coupled human-Earth system model.

Element 2: Model Development

The core of the E3SM project is science-driven model development. Priorities are determined by the need for the fully coupled modeling system to accurately simulate the processes and overall behaviors of the Earth’s water cycle changes and impacts, human-Earth system feedbacks, and sea-level rise and coastal impacts. The Model Development element connects the science needs with computing power provided by the DOE Office of Science (DOE-SC). E3SM is the only Earth system modeling project to target DOE Leadership Computing Facilities (LCFs) as its primary computing architectures. The E3SM model development path includes three development cycles for its modeling system over ~10 years. The staged nature of model development (Figure 1) recognizes that multiple versions are undergoing different stages of development and testing at any given time. With the release of Version 1 (v1) of the model in April 2018, the project switched to an open-source development approach, in which the developmental version of the code is available to everyone. The project has committed to fully documenting and testing each major model release, including benchmarking runs for performance and control simulations for scientific evaluation. E3SM has completed its Version 2 (v2) modeling system, which is being used in the v2 experiment campaign, along with the v2 model code documentation, simulation configurations, and model output from an initial set of runs. The Version 3 (v3) experimental campaign, which is described in subsequent sections, served as the starting point for v3 development priorities during Phase 3, just as the Version 4 Science Questions motivate the early v4 development priorities.

Element 3: Leadership Architectures

We cannot overemphasize the interdependence of the E3SM project and DOE LCF resources. High-end scientific computing has been relatively evolutionary and predictable since the adoption twenty-five years ago of massively parallel, microprocessor-based, distributed memory supercomputers. From the late 1980s through the late 1990s, these systems, such as the Cray T3E and IBM-SP, ultimately replaced the large, special-purpose, shared-memory vector systems that were the mainstay of many scientific communities, including weather and Earth-system simulation. That disruptive decade required Earth system modelers to experiment with different architectures and programming models to sustain progress.

Analogously, high-end scientific computing has entered a new disruptive period. As microprocessors have become ever smaller, they are approaching limits dictated by power consumption and heat generation, requiring new and experimental processor designs that are the building blocks for the next generations of high-end computers. Achieving grand-challenge science goals requires the E3SM project to lead the Earth system modeling community in adapting to unprecedented challenges in the computing landscape, including slower system clock speeds and increased software complexity driven by computing system heterogeneity.

In the US, the hardware path to exascale is now settled and will be GPU-based. We will see two GPU-based exascale systems during Phase 3 of the project, in addition to the existing pre-exascale GPU systems already installed at the LCFs and at NERSC. The two exascale systems, OLCF’s Frontier and ALCF’s Aurora, are based on AMD and Intel GPUs, respectively, while the pre-exascale systems (e.g., OLCF’s Summit and NERSC’s Perlmutter) are based on NVIDIA GPUs. Our main goal is to ensure that E3SM is ready to achieve grand challenge science goals across all of these GPU-based systems. We have adopted performance-portable approaches designed to work efficiently across all of these GPU architectures while maintaining E3SM’s excellent performance on traditional CPU architectures.

Supporting current and upcoming next-generation DOE machines will continue to require a mix of major code refactoring (rewriting with new code), ongoing optimization, and longer-term research in algorithms and computational approaches.

Element 4: E3SM Infrastructure

The priority science drivers and resulting three-year experiments were used to define the functionality of the initial simulation system. Infrastructure design is based on the requirement to facilitate hypothesis-testing workflows (configuration, simulation, diagnostics, analysis). As mentioned above, the infrastructure element evolves continuously and does not have the distinct phasing of the other three elements. The infrastructure maintains a disciplined software engineering structure and builds turnkey workflows to enable efficient code development, testing, simulation design, experiment execution, analysis of output, and distribution of results within and outside the project. Because the E3SM model system is available to users outside of the project, the infrastructure includes the documentation and software repositories expected of open-source software systems.

Project Phasing and its Relationship to Model Versions

Administratively, DOE has supported the E3SM project under the Scientific Focus Area structure, which requires a full proposal and complete peer review every three to four years. We designate each period covered under a single proposal as a project “Phase.” During each phase, the project develops and runs multiple versions of the E3SM system. For example, during Phase 2 (2018-2022), we developed E3SM v2 and ran simulations using both the v1 and v2 models. Many model features that we developed during Phase 2 under the “next generation development (NGD) subprojects” are being integrated and tested in the v3 model. This proposal outlines the E3SM Phase 3 (2023-2025) activities, including completion of the v2 simulations, development of E3SM v3 and associated simulations driven by the v3 science questions, and development of features for integration into the v4 model, which will be used in major simulations in Phase 4 from 2026 to 2028.
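The overlap between phases and model versions described above can be made concrete with a small lookup table. The following is only an illustrative sketch in Python: the `Phase` class and the helper functions `phase_for_year` and `active_versions` are hypothetical names introduced here, not part of any E3SM tooling. Only the years and versions stated in this section are encoded; Phase 1 is omitted because its dates are not given here, and development work during Phase 4 is left empty because the text does not describe it.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Phase:
    """One proposal period (hypothetical structure, for illustration only)."""
    number: int
    start_year: int
    end_year: int
    developed: Tuple[str, ...]  # model versions under development in this phase
    simulated: Tuple[str, ...]  # model versions used for simulations in this phase


# Values taken from the text above; nothing beyond it is assumed.
PHASES = (
    Phase(2, 2018, 2022, developed=("v2",), simulated=("v1", "v2")),
    Phase(3, 2023, 2025, developed=("v3", "v4"), simulated=("v2", "v3")),
    Phase(4, 2026, 2028, developed=(), simulated=("v4",)),  # development not stated
)


def phase_for_year(year: int) -> Optional[Phase]:
    """Return the phase covering the given year, or None if not listed."""
    for phase in PHASES:
        if phase.start_year <= year <= phase.end_year:
            return phase
    return None


def active_versions(year: int) -> List[str]:
    """Return all versions being developed or simulated in the given year."""
    phase = phase_for_year(year)
    if phase is None:
        return []
    return sorted(set(phase.developed) | set(phase.simulated))


if __name__ == "__main__":
    for y in (2020, 2024, 2027):
        p = phase_for_year(y)
        print(y, "Phase", p.number, active_versions(y))
```

Listing developed and simulated versions separately reflects the point made above that several model versions are at different stages of development and use within any single phase.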