Introduction to EAMxx and its Superior GPU Performance

Over the past 4 years, E3SM has built a new global nonhydrostatic atmosphere model aimed at superior performance on the current and upcoming hybrid architectures of DOE’s Leadership Computing Facilities (LCFs), such as Theta, Summit, Frontier, and Aurora (Bertagna et al., 2020; Taylor et al., 2020). The E3SM Atmosphere Model in C++ (EAMxx) is written in C++ and uses the Kokkos library to run efficiently on CPUs, GPUs, and (hopefully) whatever architectures come next. This effort originally focused on very high resolution and was known as the Simple Cloud-Resolving E3SM Atmosphere Model (SCREAM). Because the model is now being extended to conventional resolutions, EAMxx is a more fitting name; the name SCREAM will continue to refer to cloud-resolving configurations of the EAMxx code. This article reports on the first simulations with EAMxx, which were performed over the last few months. Because the parameterizations needed at low resolution (e.g., deep convection and gravity wave drag) are not yet available in EAMxx, all simulations reported below use the SCREAM configuration.

EAMxx/SCREAM Climate Skill

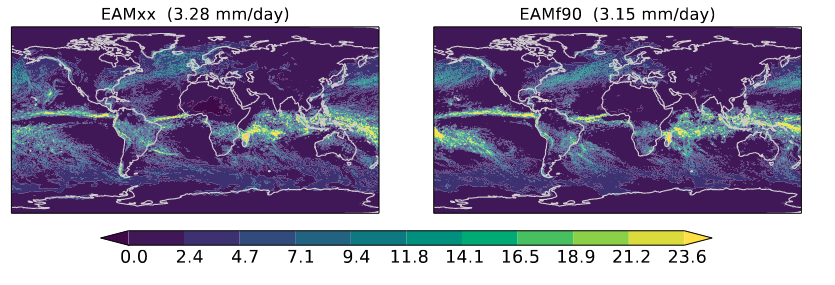

EAMxx was developed by first modifying the existing EAM Fortran code (hereafter EAMf90) to work well at cloud-resolving resolutions, then using the resulting prototype as a blueprint for the C++ port and for an initial exploration of the climate of the SCREAM configuration. Comparing the Fortran and C++ codes was a key tool for verifying that EAMxx is correct and for checking that it meets our performance goals. Figure 1 shows that EAMxx and EAMf90 produce very similar climates. Testing of individual processes was even more stringent: the output of each C++ process in the v1 model was confirmed to be bit-for-bit identical to that of the corresponding Fortran implementation when both were compiled with low levels of optimization (Bertagna et al., 2019). Low optimization matters here because aggressive compiler optimizations may reorder floating-point operations, which changes results in the last bits. The full C++ and Fortran models are not bit-for-bit identical, however, because the C++ process coupler is completely different from the Fortran one, so the two cannot be compared exactly.
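The notion of bit-for-bit agreement can be made concrete with a small sketch. The Python below is illustrative only and is not the EAMxx testing infrastructure; the helper names `bits` and `bit_for_bit` are hypothetical. It compares values by their raw IEEE-754 bit patterns, which is stricter than `==`, and shows how merely regrouping a floating-point sum, as an optimizing compiler may do, changes the last bit of the result.

```python
import struct
from typing import Sequence


def bits(x: float) -> int:
    """Raw IEEE-754 binary64 bit pattern of a float, as an unsigned integer."""
    return struct.unpack("<Q", struct.pack("<d", x))[0]


def bit_for_bit(a: Sequence[float], b: Sequence[float]) -> bool:
    """True only if a and b have the same length and identical bit patterns.

    Stricter than ==: it tells 0.0 from -0.0, and a NaN compared with itself
    counts as identical (whereas nan == nan is False).
    """
    return len(a) == len(b) and all(bits(x) == bits(y) for x, y in zip(a, b))


# The same three numbers summed with two different groupings, as a compiler
# might produce if it is allowed to reassociate floating-point additions.
x, y, z = 0.1, 0.2, 0.3
grouped_left = (x + y) + z    # 0.6000000000000001
grouped_right = x + (y + z)   # 0.6

print(grouped_left == grouped_right)                  # False
print(bit_for_bit([grouped_left], [grouped_right]))   # False
print(0.0 == -0.0, bit_for_bit([0.0], [-0.0]))        # True False
```

Comparing bit patterns rather than using a tolerance is what makes this kind of test so stringent: any difference in the order of operations is flagged, however small its numerical effect.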

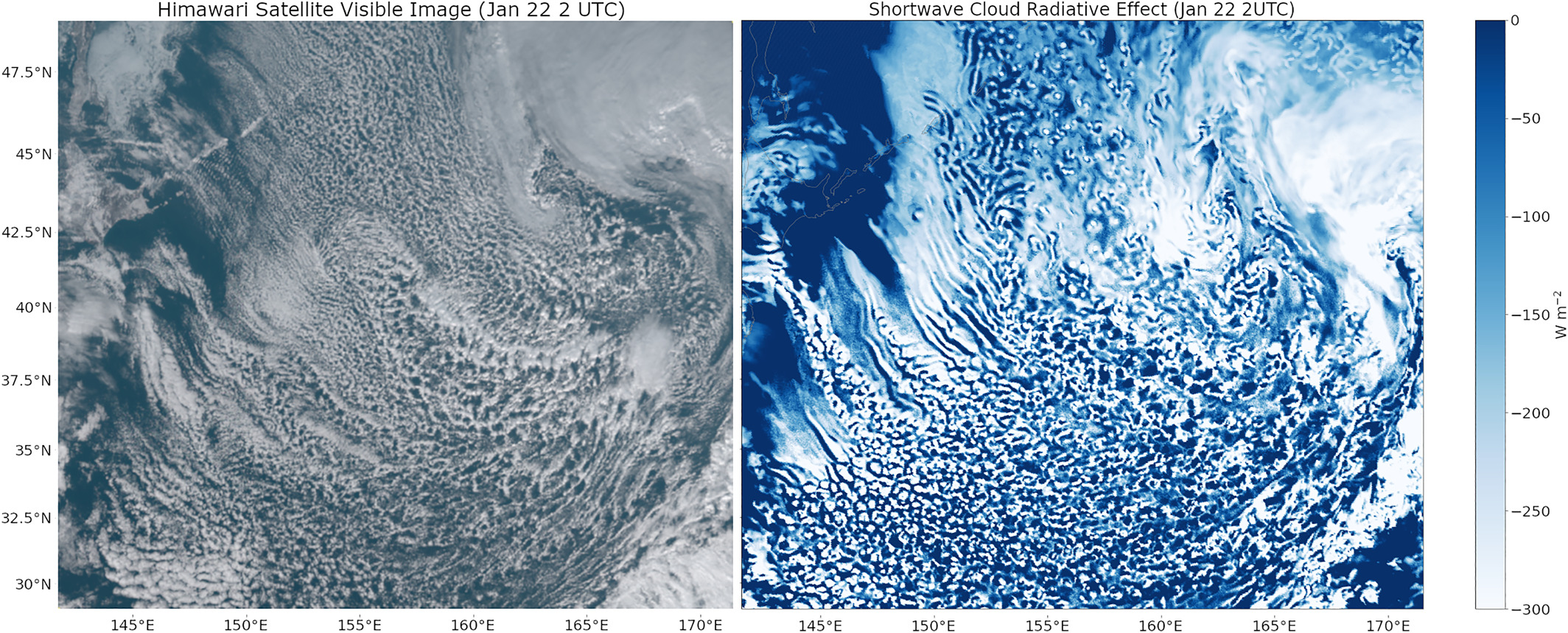

Because EAMxx and EAMf90 are so similar, simulations with the EAMf90 prototype provide a useful preview of EAMxx climatology. As noted in a previous article, a 40-day EAMf90-SCREAM run was analyzed extensively in Caldwell et al. (2021) and contributed to the DYnamics of the Atmospheric general circulation Modeled On Non-hydrostatic Domains (DYAMOND) intercomparison project. SCREAM was found to reproduce important weather phenomena well, particularly the diurnal cycle of tropical precipitation, the vertical structure of tropical convection, and subtropical stratocumulus. Figure 2 compares a cold-air outbreak off the coast of Siberia in SCREAM with observations. EAMf90-SCREAM was also used to simulate the 2012 North American Derecho; derechos are a particularly violent type of windstorm associated with midlatitude thunderstorms. SCREAM reproduced this storm at least as well as the well-established WRF regional model, which has been used operationally to forecast such storms for more than a decade (Liu et al., 2022).

EAMxx Computational Performance

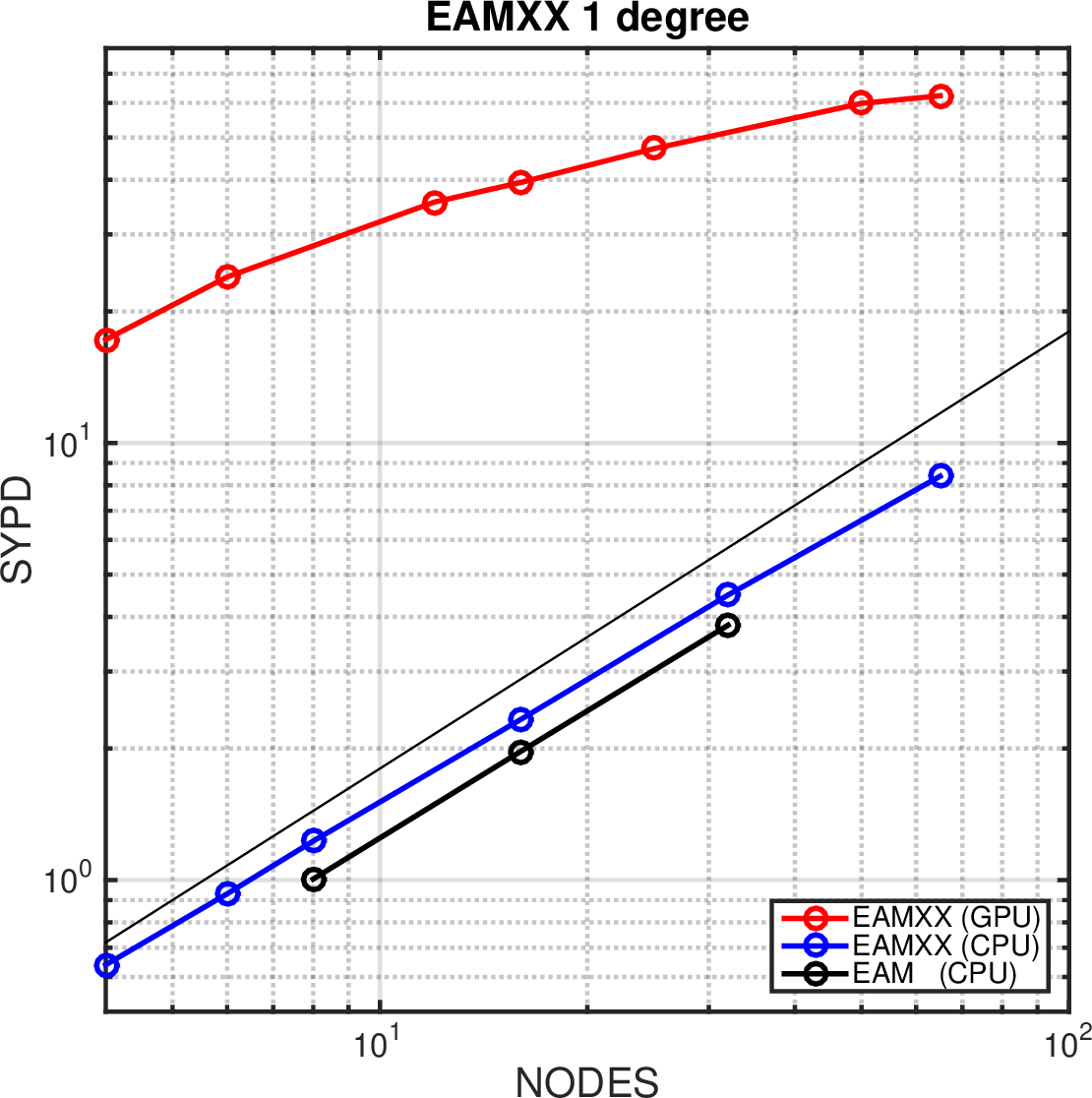

Figure 3. Strong scaling of the full EAMxx atmosphere model at low resolution (110 km, roughly 1 degree), showing simulated years per day (SYPD) as a function of the number of Summit nodes. Performance is shown for the Fortran (black) and C++ (blue) versions on CPUs and for the C++ version on GPUs (red). The solid line without tick marks denotes perfect scaling. The CPU runs use 42 MPI tasks per node, while the GPU runs use 6 MPI tasks per node to drive the node's 6 GPUs.

The motivation for recoding the model in C++ is improved performance, so it is gratifying to see that EAMxx on GPUs is 8-30x faster than EAMf90 (Figure 3). Even on CPUs, the C++ code is faster than the original Fortran; since neither language is inherently faster than the other, this gain reflects better coding rather than the choice of language. Besides comparing the speed of different model versions for a given amount of computing resources, Figure 3 lets us assess strong scaling: the speedup obtained by increasing the processor count at fixed model resolution. A relatively low resolution (110 km) was chosen for this figure so that it is easy to reach configurations with more processing elements available than there is work to distribute among them. Until this limit is reached, the CPU version of the code scales almost perfectly. GPUs run efficiently only when each one has a large amount of work, and adding nodes at fixed resolution reduces the work per GPU, so GPU runs are not expected to scale as well.
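The strong-scaling quantities discussed above reduce to simple arithmetic. In the Python sketch below, the SYPD values are hypothetical and are not read from Figure 3, and the 1-degree grid is assumed to be a cubed sphere with 6 x 30 x 30 = 5400 spectral elements distributed as whole elements across MPI ranks; EAMxx's actual decomposition may differ (for example, physics may be split by columns). Only the ranks-per-node values come from the Figure 3 caption, and the function names are hypothetical.

```python
import math


def scaling_table(nodes, sypd):
    """Speedup and parallel efficiency relative to the smallest node count.

    speedup    = SYPD(N) / SYPD(N0)
    efficiency = speedup / (N / N0); 1.0 means perfect strong scaling.
    """
    n0, s0 = nodes[0], sypd[0]
    table = []
    for n, s in zip(nodes, sypd):
        speedup = s / s0
        table.append((n, speedup, speedup / (n / n0)))
    return table


def saturation_nodes(work_units, ranks_per_node):
    """Smallest node count at which each MPI rank holds at most one work unit.

    Past this point, extra nodes have no work to take on (under the
    whole-element decomposition assumed here), so strong scaling stalls.
    """
    return math.ceil(work_units / ranks_per_node)


# HYPOTHETICAL SYPD values for illustration only (not taken from Figure 3).
nodes = [8, 16, 32, 64]
sypd = [2.0, 3.9, 7.6, 14.4]
for n, speedup, eff in scaling_table(nodes, sypd):
    print(f"{n:4d} nodes  speedup {speedup:5.2f}  efficiency {eff:.2f}")

# ASSUMPTION: 1-degree cubed-sphere grid with 6 * 30 * 30 = 5400 elements.
elements = 6 * 30 * 30
print(saturation_nodes(elements, 42))  # CPU layout, 42 ranks/node -> 129
print(saturation_nodes(elements, 6))   # GPU layout, 6 ranks/node -> 900
```

Under these assumptions, the CPU runs run out of elements at about 129 nodes, and at that node count each of the 6 GPUs per node would hold only about 7 elements. This illustrates why the GPUs, which need far more work each to run efficiently, are not expected to scale as well.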

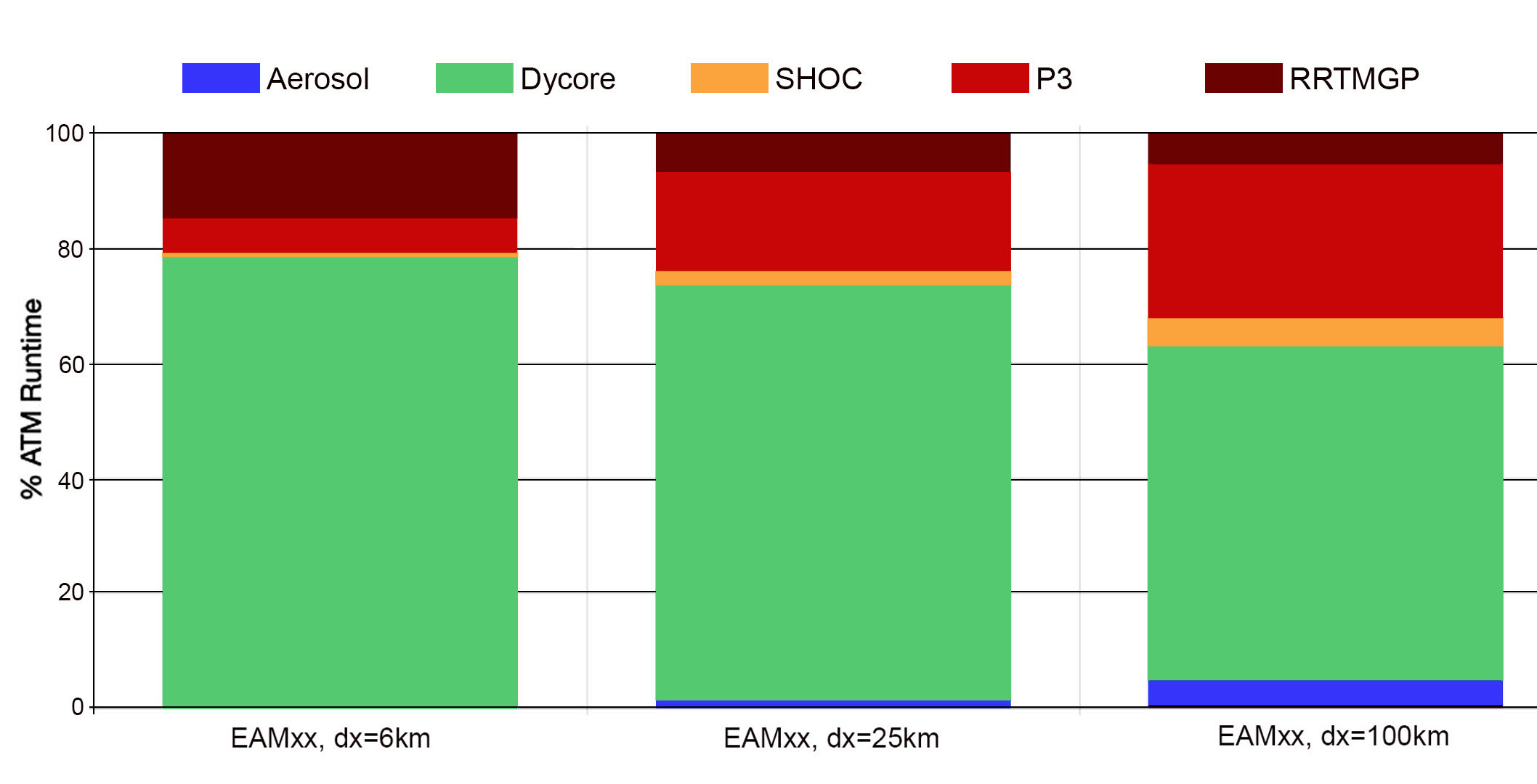

For the target 3.25 km resolution, there are no strong-scaling results for the full model yet, but the EAMxx dynamical core (dycore) alone runs at 1.38 SYPD on 4096 of Summit’s ~4600 nodes. The dycore is the most expensive process in E3SM, and its share of the total cost grows as resolution increases (Figure 4), so at 3.25 km the other processes should add comparatively little cost. The full model should therefore get close to 1 SYPD using all of Summit.

Figure 4. Fraction of time spent in each process for EAMxx at dx = 6 km, 25 km, and 100 km (left to right). Green is the dycore, blue is prescribed aerosol, orange is SHOC, red is P3, and dark red is RRTMGP. Original graphic created by PACE.
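The extrapolation from the dycore-only benchmark to the full model can be written out explicitly. This Python sketch is a back-of-the-envelope model, not necessarily the method behind the estimate above. It assumes that the dycore costs the same inside the full model as it does alone, that the dycore's share f of the total runtime at 3.25 km is known (Figure 4 reports shares only at 6, 25, and 100 km, so the values of f used here are hypothetical), and that scaling from 4096 to ~4600 nodes is perfect, which is optimistic. The function name is hypothetical.

```python
def full_model_sypd(dycore_sypd, dycore_fraction, nodes_used, nodes_target):
    """Back-of-the-envelope full-model SYPD from a dycore-only benchmark.

    SYPD is inversely proportional to wall-clock time per simulated year.
    If the dycore accounts for a fraction f of the full model's runtime,
    the full model takes 1/f times as long, so its SYPD is f * dycore SYPD.
    The node-count ratio assumes PERFECT strong scaling (optimistic).
    """
    if not 0.0 < dycore_fraction <= 1.0:
        raise ValueError("dycore_fraction must be in (0, 1]")
    return dycore_sypd * dycore_fraction * (nodes_target / nodes_used)


# From the text: 1.38 SYPD for the dycore on 4096 nodes; Summit has ~4600 nodes.
# The dycore fractions below are HYPOTHETICAL placeholders.
for f in (0.6, 0.7, 0.8):
    estimate = full_model_sypd(1.38, f, nodes_used=4096, nodes_target=4600)
    print(f"dycore share {f:.0%}: ~{estimate:.2f} SYPD")
```

For the hypothetical shares above, the estimate ranges from roughly 0.9 to 1.2 SYPD, which shows how directly such a projection depends on the dycore's share of the cost.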

Publications

- L. Bertagna, M. Deakin, O. Guba, D. Sunderland, A. M. Bradley, I. K. Tezaur, M. A. Taylor, and A. G. Salinger. Hommexx 1.0: A performance portable atmospheric dynamical core for the energy exascale earth system model. Geoscientific Model Development, 12(4), 2019, DOI: 10.5194/gmd-12-1423-2019.

- L. Bertagna, O. Guba, A. M. Bradley, M. A. Taylor, S. Rajamanickam, J. G. Foucar, and A. G. Salinger. A performance-portable nonhydrostatic atmospheric dycore for the energy exascale earth system model running at cloud-resolving resolutions. In SC20: International Conference for High Performance Computing, Networking, Storage and Analysis, 2020, DOI: 10.1109/SC41405.2020.00096

- P. M. Caldwell, C. R. Terai, B. Hillman, N. D. Keen, P. Bogenschutz, W. Lin, H. Beydoun, M. Taylor, L. Bertagna, A. M. Bradley, T. C. Clevenger, A. S. Donahue, C. Eldred, J. Foucar, J.-C. Golaz, O. Guba, R. Jacob, J. Johnson, J. Krishna, W. Liu, K. Pressel, A. G. Salinger, B. Singh, A. Steyer, P. Ullrich, D. Wu, X. Yuan, J. Shpund, H.-Y. Ma, and C. S. Zender. Convection-permitting simulations with the E3SM global atmosphere model. J. Adv. Modeling Earth Systems, 13(11), Nov. 2021, DOI: 10.1029/2021MS002544

- W. Liu, P. Ullrich, J. Li, C. M. Zarzycki, P. M. Caldwell, L. R. Leung, and Y. Qian. The June 2012 North American Derecho: A testbed for evaluating regional and global climate modeling systems at cloud-resolving scales. Submitted to J. Adv. Modeling Earth Systems, 2022, DOI: 10.1002/essoar.10511614.1.


Contact

- Peter Caldwell, Lawrence Livermore National Laboratory

This article is part of the E3SM “Floating Points” Newsletter.