Experimental High-Resolution Land Model Run on GPU Devices

One challenge for Earth system modelers is that land processes vary at spatial scales much finer than the current highest-resolution model configurations. In other words, interactions of the physical climate system with human systems such as agriculture, managed forests, dwellings, road and energy infrastructure, managed water resources, and urban environments all occur at spatial scales too small to be represented explicitly within the current high-resolution E3SM simulations. This is a significant problem, as process-level predictive understanding of these interactions is a basic requirement to inform analysis of policy options and decision-making.

Tackling this challenge, E3SM scientists have set an ambitious yet realistic goal which moves the project much closer to a policy-relevant scale: simulating land processes at a spatial resolution of 1 km x 1 km. This leverages the abundant data resources describing the historical and present-day distribution of land characteristics, which can be used to derive predictive models of Earth-human system interactions at fine spatial scales.

Moving the E3SM Land Model (ELM) to a 1 km grid presents unique computational challenges, however. While the land model is computationally relatively inexpensive, shifting from the current high-resolution grid spacing of about 0.25 degree (~28 km at the equator) to a resolution of 1 km increases the computational cost more than 600-fold. Since predictive carbon and nutrient cycle processes are central to many policy-relevant modeling applications, scientists face a challenge in executing high-resolution ‘spinup’ simulations, in which the model is run for long periods until a steady state is reached, on current compute platforms. At the same time, compute architectures are shifting from CPU (central processing unit)-dominated platforms to much more heterogeneous configurations with CPUs and GPUs (graphics processing units) and multi-level memory hierarchies.
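The size of that cost increase can be sanity-checked with a back-of-envelope calculation. The sketch below is not the calculation behind the figure quoted above; it assumes that cost scales with the number of grid cells, that cells are square, and that the timestep is unchanged. The function names and the Earth radius constant are illustrative choices.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; an assumption for this estimate


def degrees_to_km(deg, latitude_deg=0.0):
    """East-west length in km of `deg` degrees of longitude at a given latitude."""
    km_per_degree = 2.0 * math.pi * EARTH_RADIUS_KM / 360.0
    return deg * km_per_degree * math.cos(math.radians(latitude_deg))


def cell_count_ratio(coarse_km, fine_km):
    """Factor by which the number of square cells grows when a 2-D grid is refined."""
    return (coarse_km / fine_km) ** 2


coarse = degrees_to_km(0.25)           # grid spacing of the 0.25 degree grid at the equator
ratio = cell_count_ratio(coarse, 1.0)  # refinement to a 1 km grid
print(f"0.25 degree is about {coarse:.1f} km; cell count grows about {ratio:.0f}-fold")
```

At the equator this gives a spacing just under 28 km and a cell-count ratio in the high 700s. Away from the equator a 0.25 degree cell is narrower east to west, so the ratio is smaller there, which is consistent with a domain-wide figure of "more than 600".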

The objective of this work is to pioneer a 1 km gridded ELM implementation over the North American region, and demonstrate the ability to perform offline model spinup and historical period simulations on a hybrid CPU-GPU architecture. The team is targeting the Summit machine at the Oak Ridge Leadership Computing Facility (OLCF), designing and testing data and code configurations that make effective use of its GPU devices and memory architecture. One goal is to produce a “gold standard” set of offline simulations for the region, using the best possible high-resolution observational data drivers. These model results can then serve as a reference for coupled simulation results using high-resolution or regionally refined grids. A second goal is to prepare ELM for the next generation of high-performance computing, which will include hybrid CPU/GPU architectures. E3SM scientists are taking a novel approach to porting ELM to GPUs, moving the entire model process calculation onto the GPU, and using the threading capability of the GPU devices to handle many thousands of land grid cells in parallel on each Summit node. Once the new capability has been demonstrated with offline land simulations, the team hopes to test it in a coupled configuration using the North American regionally refined modeling grids.

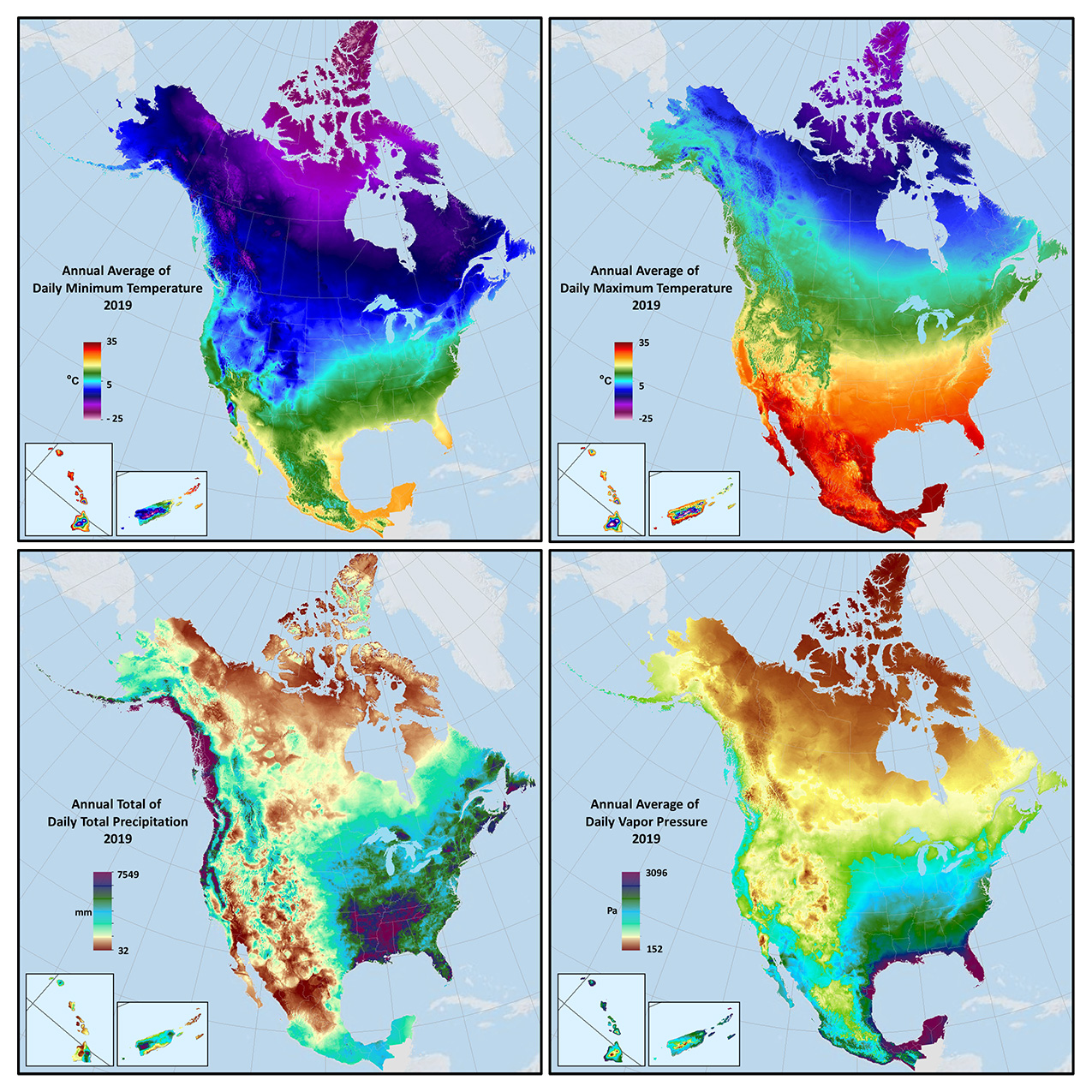

A critical need for the high-resolution offline ELM simulations is a high-quality and long-duration surface weather dataset. Through E3SM support, the team has just published a new methodology (Thornton et al., 2021). The resulting dataset (Daymet v4; Thornton et al., 2020) meets the high-resolution ELM requirements, providing daily temperature, precipitation, humidity, and downward shortwave radiation over the entire North American domain at 1 km resolution for 1980-2020. Further E3SM support has resulted in methods for temporal downscaling of the daily data to the 3-hourly inputs needed for offline ELM simulation (Kao et al., in prep). Based on surface weather observations and sophisticated three-dimensional regression methods that capture horizontal and vertical gradients, Daymet v4 provides detailed geographic variation in critical model drivers (Figure 1). The methods and data products include a comprehensive assessment of uncertainty (prediction error and bias), which can inform broader ELM parametric and structural uncertainty quantification efforts. Other high-resolution model inputs, such as soil texture and the distribution of plant functional types, have been assembled from existing data sources.

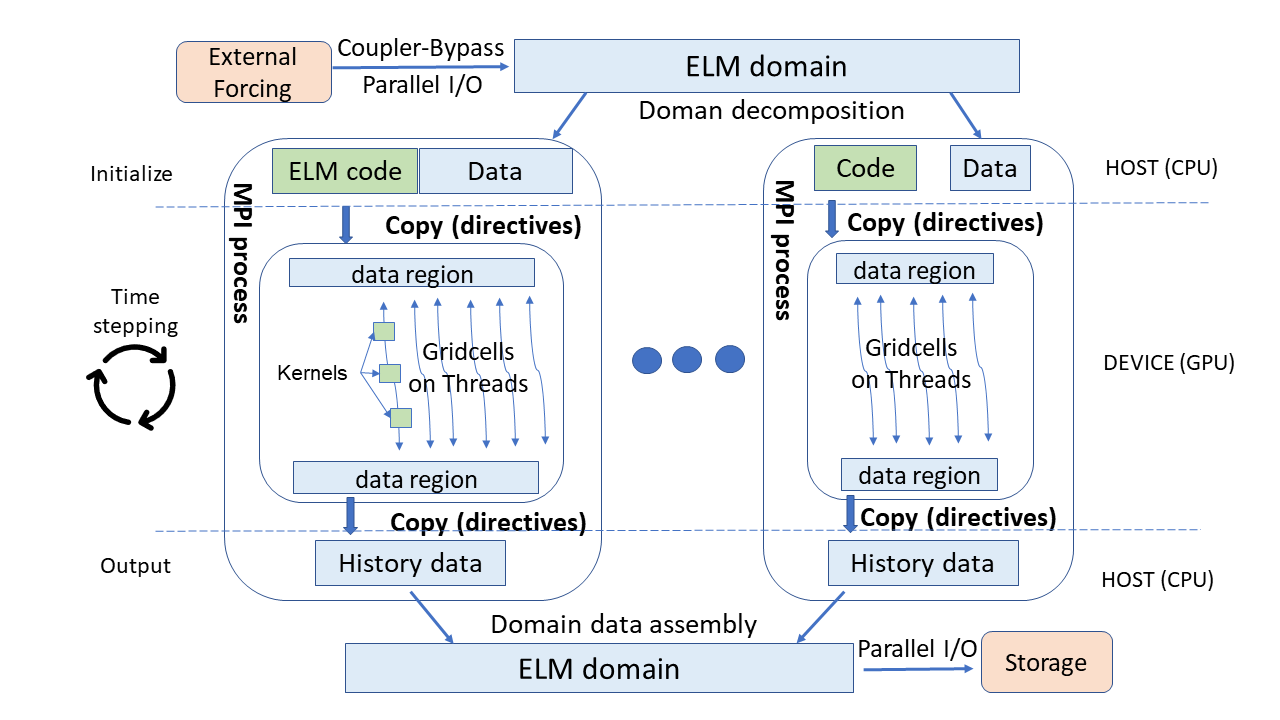

Figure 2. Implementation of ELM on a hybrid CPU/GPU architecture, showing an example for an offline land model run using external forcing data. In this implementation individual ELM grid cells are assigned to GPU threads, with multiple kernel launches across threads during each model timestep. Data and code stay resident on the GPU across multiple timesteps, with data moved onto the device as needed at initialization and moved off the device at history writing intervals.

On a technical level, the port of ELM to a hybrid CPU/GPU architecture is complete, and every subroutine has been rigorously tested for correctness using an automated functional unit testing framework. The team’s work required significant code restructuring. These architectural changes improved memory use and performance in the original CPU-only version of ELM, delivering approximately 10% speedup under conditions typical of a production run, and have been merged into the mainline model, benefitting the upcoming E3SM v2 simulation campaign.

The GPU-enabled code still resides on an experimental branch. Its GPU implementation uses OpenACC directives to move necessary data onto the GPU device, perform all science calculations for each model grid cell via multiple GPU kernels, and move data off the GPU to the CPU at required intervals for handling of history outputs (Figure 2). After recent performance tuning for the Summit architecture, the code can run about 4,000 ELM grid cells per GPU device, or about 24,000 grid cells per Summit node. MPI tasks are used to coordinate work across multiple nodes, with 6 MPI tasks per node, each one handling the operations on a single GPU device. The current implementation uses the available GPU resource fully, but uses only a small fraction of the CPU resource; the team expects this to change in the future. E3SM scientists are also exploring the use of OpenMP directives to handle the arrangement of data and kernel execution on GPUs, since the availability of OpenACC on future architectures is uncertain.
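The control flow described above (state resident on the device, several kernels per timestep, transfers back to the host only at history intervals) can be mimicked in plain Python. This is a toy sketch, not ELM code: a Python list stands in for GPU memory, the two "kernels" are arbitrary placeholder arithmetic, forcing data is not modelled, and `run_offline` and its parameters are hypothetical names.

```python
def run_offline(n_cells, n_steps, history_interval):
    """Mimic the CPU/GPU control flow; a Python list stands in for GPU memory."""
    transfers = {"to_device": 0, "to_host": 0}

    # Initialization: model state is copied to the (pretend) device once.
    device_state = [0.0] * n_cells
    transfers["to_device"] += 1

    history = []
    for step in range(1, n_steps + 1):
        # Several "kernel launches" per timestep; each one touches every cell.
        # On a real GPU, each cell would be handled by its own thread.
        device_state = [s + 1.0 for s in device_state]  # placeholder kernel 1
        device_state = [s * 0.5 for s in device_state]  # placeholder kernel 2

        # State leaves the device only at history-writing intervals.
        if step % history_interval == 0:
            history.append(list(device_state))
            transfers["to_host"] += 1

    return history, transfers


history, transfers = run_offline(n_cells=8, n_steps=48, history_interval=24)
print(len(history), transfers)
```

With 48 steps and a 24-step history interval, the state is copied to the device once and back to the host twice, regardless of how many kernels run per step. Keeping transfers off the per-timestep path is the point of the resident-data design.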

Currently the team is testing different domain decomposition approaches for dividing work across multiple nodes and GPU devices on Summit, and evaluating Input/Output (I/O) performance issues when operating the experimental branch on a large number of Summit nodes. The team has a Director’s Discretionary allocation on Summit for this testing. At-scale runs of the GPU-enabled ELM for the North American domain are planned for August 2021.
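To make the decomposition question concrete, the sketch below compares two generic ways of assigning grid cells to MPI tasks, using the figures given above (about 4,000 cells per GPU, 6 GPUs and MPI tasks per node). The article does not say which approaches the team is testing, so both schemes, the function names, and the 1,000,000-cell domain size are assumptions for illustration only.

```python
def nodes_needed(n_cells, cells_per_gpu=4000, gpus_per_node=6):
    """Smallest node count whose GPUs can hold n_cells (integer ceiling division)."""
    cells_per_node = cells_per_gpu * gpus_per_node
    return -(-n_cells // cells_per_node)


def block_decomposition(n_cells, n_ranks):
    """Contiguous, near-equal blocks of cell indices, one block per MPI rank."""
    base, extra = divmod(n_cells, n_ranks)
    blocks, start = [], 0
    for rank in range(n_ranks):
        size = base + (1 if rank < extra else 0)  # first `extra` ranks get one more cell
        blocks.append(range(start, start + size))
        start += size
    return blocks


def round_robin_decomposition(n_cells, n_ranks):
    """Strided assignment: rank r gets cells r, r + n_ranks, r + 2 * n_ranks, ..."""
    return [range(rank, n_cells, n_ranks) for rank in range(n_ranks)]


n_cells = 1_000_000            # hypothetical land-cell count, not from the article
n_nodes = nodes_needed(n_cells)
n_ranks = n_nodes * 6          # one MPI rank per GPU
blocks = block_decomposition(n_cells, n_ranks)
strided = round_robin_decomposition(n_cells, n_ranks)
print(n_nodes, n_ranks, max(len(b) for b in blocks), max(len(s) for s in strided))
```

Contiguous blocks keep neighbouring cells on the same rank, which tends to favour contiguous reads of forcing data. Strided assignment spreads any spatially clustered expensive cells across ranks. Which matters more depends on the I/O and load-balance behaviour that the testing described above is meant to measure.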

References

- Thornton, P. E., Shrestha, R., Thornton, M., Kao, S.-C., Wei, Y., & Wilson, B. E. (2021). Gridded daily weather data for North America with comprehensive uncertainty quantification. Scientific Data, 8(1), 190. https://doi.org/10.1038/s41597-021-00973-0

- Thornton, M. M., Shrestha, R., Wei, Y., Thornton, P. E., Kao, S.-C., & Wilson, B. E. (2020). Daymet: Daily Surface Weather Data on a 1-km Grid for North America, Version 4. ORNL DAAC. https://doi.org/10.3334/ORNLDAAC/1840

- Kao, S.-C. et al. (in prep). Temporal downscaling of Daymet v4 data to drive high-resolution land models.

Funding

- The U.S. Department of Energy Office of Science, Biological and Environmental Research supported this research as part of the Earth System Model Development Program Area through the Energy Exascale Earth System Model (E3SM) project.

Contacts

- Peter Thornton, Oak Ridge National Laboratory

- Peter Schwartz, Oak Ridge National Laboratory

- Dali Wang, Oak Ridge National Laboratory