Summary from SciDAC PI Meeting

After three years of virtual meetings, the Scientific Discovery through Advanced Computing (SciDAC) program held its Principal Investigators’ Meeting on September 12-14, 2023, in Rockville, Maryland. The meeting brought together principal investigators and project scientists from SciDAC5 projects across five Office of Science programs (Biological and Environmental Research (BER), Basic Energy Sciences, Fusion Energy Sciences, High Energy Physics, and Nuclear Physics) and the Office of Nuclear Energy, all of which partner with the two SciDAC Institutes in the Advanced Scientific Computing Research (ASCR) program, FASTMath and RAPIDS. The BER program’s SciDAC partnership is focused on Earth System Model Development (ESMD). Seven new BER-SciDAC projects started in fall 2022, and this was the first opportunity for participants from those projects to meet and interact with the larger SciDAC community.

The importance of BER-ASCR SciDAC partnership to E3SM

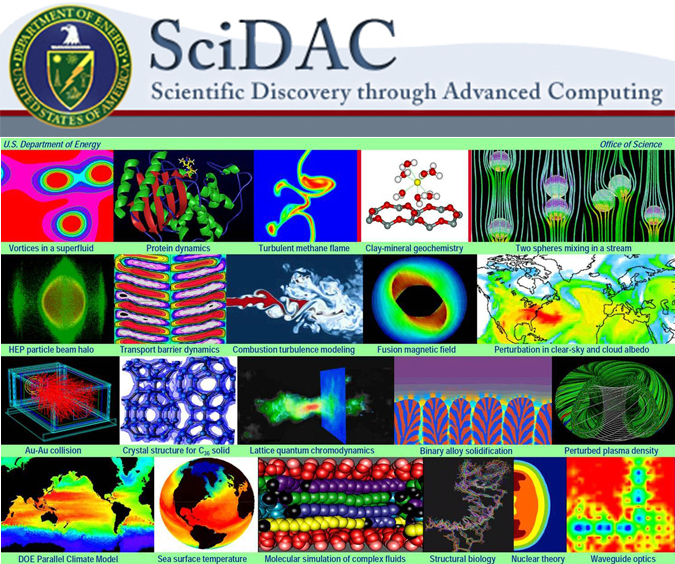

The plenary parts of the meeting included an overview of the breadth of the whole SciDAC program, with high-level summaries presented for both SciDAC Institutes and each of the six partnership programs. Also included were overviews of ASCR’s leadership computing facilities, discussions of SciDAC challenges and successes during the pandemic, discussions of the use of machine learning and artificial intelligence, and two program-wide poster sessions. In the overview presentation of the BER-ASCR SciDAC Partnership, Xujing Davis, ESMD Program Manager, emphasized the critical importance of the SciDAC Partnership to BER’s E3SM advancement. She noted that each component and each version of E3SM has benefited greatly from SciDAC investment, including substantial contributions to algorithms and performance. Even before the E3SM project started, SciDAC partnerships had contributed substantially and broadly to the Earth system modeling science community. One good example is the ice-sheet modeling effort, which started with the ISICLES project, initiated in 2009 by Lali Chatterjee, the ASCR SciDAC Program Manager at the time, and continued through PISCEES (2012-2017), ProSPect (2017-2022), and the current FAnSSIE (2022-2027) project. Through these projects, DOE labs have been able to develop a world-class ice-sheet modeling capability from scratch. In addition to enabling dynamic ice sheets in E3SM, exploration of different computational approaches to ice-sheet modeling under the SciDAC partnership has advanced climate science more broadly, with two other climate models (the Community Earth System Model and the UK Earth System Model) adopting ice-sheet models developed under those earlier SciDAC efforts. As the E3SM project enters its 10th year and develops its new decadal strategic vision, the SciDAC partnership will continue to play a vital role in E3SM advancement, particularly in its computational leadership.

BER-SciDAC Partnership Breakout

The BER-SciDAC partnership held its breakout session on day 2, which started with overview talks on the seven projects working within the E3SM ecosystem. In the second half of the session, Sarat Sreepathi, the BER Point-of-Contact on the SciDAC Coordination Committee, briefly summarized the impact of collaborations among ASCR institutes and BER projects throughout SciDAC history and emphasized the evolution of computational tractability for Earth science applications. Invited perspectives from representatives of both SciDAC Institutes, Ann Almgren (FASTMath) and Shinjae Yoo (RAPIDS), stimulated further discussion of interdisciplinary collaborations. Despite the well-known challenges related to language barriers and cultural differences across disciplines, participants from both the computational side and the Earth system science side expressed enthusiasm for the BER-SciDAC partnership as well as appreciation of the open-mindedness of the participants.

In addition to providing an overview of FASTMath capabilities and partnerships with BER, Ann emphasized that each partnership has unique challenges and opportunities and that, while software is an important aspect, successful collaboration also takes the form of sharing expertise. This takes time and open conversation, and the SciDAC program fosters both. Often what is considered “hard” or “easy” by an expert in one field is viewed differently by experts in another; not realizing this can lead to missed opportunities.

The need for software sustainability was emphasized, especially in the context of application-aware algorithms and numerical method development, and there was a brief discussion of the path forward after the end of the Exascale Computing Project, which provided partial support for such work.

Shinjae provided BER-focused examples for the RAPIDS capability areas of data understanding, platform readiness, data management, and artificial intelligence (AI). AI applications were discussed at length by the group, with strong interest in AI-generated surrogate models that reduce computational cost. There was further discussion of approaches for increasing the trustworthiness of AI, including physics-constrained machine learning.

Cross-Discipline Discussion

The breakout also included an hour dedicated to group discussion of three cross-cutting topics: 1) Computational resources, 2) Multiscale representations, and 3) Vision of computational climate science in 5 and 10 years, led by Matt Hoffman, Hui Wan, and Sarat Sreepathi, respectively.

Key points from the three topics are summarized first, followed by a more detailed account of each:

- Computational resources: GPU utilization remains a challenge in Earth system modeling but many strategies are being identified through collaboration with computational scientists. Some of these strategies are leading to new scientific directions.

- Multiscale representations: Multiscale representation has been tackled by varying resolution or by embedding subgrid process models, each of which presents tradeoffs. Evaluating the best solution for a given situation requires careful consideration of both the science and the computational aspects.

- Vision of computational climate science: Over the next decade, computational climate science must embrace new approaches and broaden its workforce.

- Computational resources: An ongoing challenge across SciDAC projects is effective utilization of the current generation of GPU-based supercomputers. The DOE leadership-class machines (at OLCF and ALCF) provide an avenue for very-high-resolution simulations, while only limited capacity is available at NERSC to support large ensembles at low to medium resolution. Scientifically, large ensembles have many advantages over single “hero” simulations, but many small jobs are much harder to justify in allocation requests, and historically such requests have not been successful. Ideas discussed included stochastic models, fault-tolerant bundled ensembles, thinking beyond ensembles to utilize exascale, and lobbying for large-capacity allocation opportunities in addition to large-capability ones. AI/ML was discussed at length as one way to utilize GPU resources effectively. While it is becoming common to use AI/ML to generate cheaper surrogate models or parameterizations, there are also opportunities to use AI/ML to optimize simulation campaigns or workflows, provide resource management, and manage load balancing. Running superparameterization algorithms (i.e., a high-resolution model embedded in the primary model as a subgrid parameterization) on the GPU side, paired with standard-resolution physics on the CPU side, is a promising avenue for fully exploiting this generation of supercomputers while also capturing high-resolution processes. In-situ analysis and visualization is another potential growth area for utilizing GPUs, but there was general agreement that it requires detailed consideration early in the design of a simulation campaign, because of the natural desire to retain the opportunity to re-analyze any part of a simulation. Often the important aspects of a simulation to analyze are not known a priori; perhaps AI/ML can identify them during the simulation.

- Multiscale representations: Representing processes across spatial scales is a common need in Earth system modeling and many other disciplines. One widely used approach is to combine different resolutions to explicitly resolve important features of vastly different scales; this can involve using multiple resolutions in a single simulation, multiple simulations, or multiple model systems. Another typical approach is to use reduced models, e.g., parameterizations that are mechanistic descriptions of the physical processes or AI/ML-based surrogate models. It is not always obvious which of the two approaches is more suitable for specific processes or scales. Reduced models can have structural errors, while multi-resolution simulations can pose challenges to numerical solvers and load balancing. It was suggested that some of the methods used or developed in other disciplines might be worth considering, e.g., adaptive mesh refinement, multirate time integrators, mesh-free methods, and multiscale asymptotic methods. The Earth system is not only multiscale but also multifaceted, because there are many quantities of interest to simulate. This poses challenges in the evaluation of numerical results and makes some subjectivity unavoidable when assessing the significance of solution changes. It was also emphasized again that, given the complexity of the Earth system modeling problem, open-mindedness is key to fruitful interdisciplinary collaborations.

- Vision of computational climate science in 5 and 10 years: The paradigm shift from CPUs to GPUs is expected to continue, with further on-node heterogeneity and disruption of computer architectures on the horizon. The participants expressed hope and interest in using AI/ML as a hedge that complements (and does not replace) explicit modeling, e.g., by augmenting parameterizations, injecting high-resolution variability, substituting for computationally expensive model sub-components, tuning uncertain parameters, and aiding in model spin-up. Some of these may require enhancements of the current simulation workflow. Uncertainty Quantification (UQ) came up as another potential area for exploration. The development and application of advanced methods, including intrusive methods such as reformulating the model equations from deterministic to stochastic, would require large-scale investments and could benefit from support from the SciDAC program as well as expertise within FASTMath and RAPIDS.

Closely related to scientific vision is the topic of fostering a diverse workforce for Earth system model development and applications. It will be beneficial to provide a variety of opportunities to explore the field at different career stages, including more internships and fellowships, as well as targeted grant proposals. Recent initiatives announced by DOE include Promoting Inclusive and Equitable Research (PIER) Plans, Urban Integrated Field Laboratories (Urban IFL), Research Development and Partnership Pilot (RDPP), Reaching a New Energy Sciences Workforce (RENEW), the National Virtual Climate Laboratory (NVCL) portal, and Climate Resilience Centers (CRCs). It was also pointed out that more opportunities for scientists to learn to speak diverse languages would help make communications more effective for outreach activities.

Outlook

This year’s SciDAC Principal Investigators’ Meeting was the first meeting of the seven new E3SM-related SciDAC projects. It showcased vibrant and fruitful collaborations between the SciDAC Institutes and climate scientists and identified many new computational directions. These should foster further collaboration over the remaining four years of the projects, with long-lasting impacts on E3SM and the Earth system modeling science community well beyond the project life span.

All talks in plenary and breakout sessions as well as posters have been archived at the event website. Materials presented at the BER breakout can also be found on a Confluence page.

This article is a part of the E3SM “Floating Points” Newsletter, to read the full Newsletter check: