SCORPIO – Parallel I/O Library

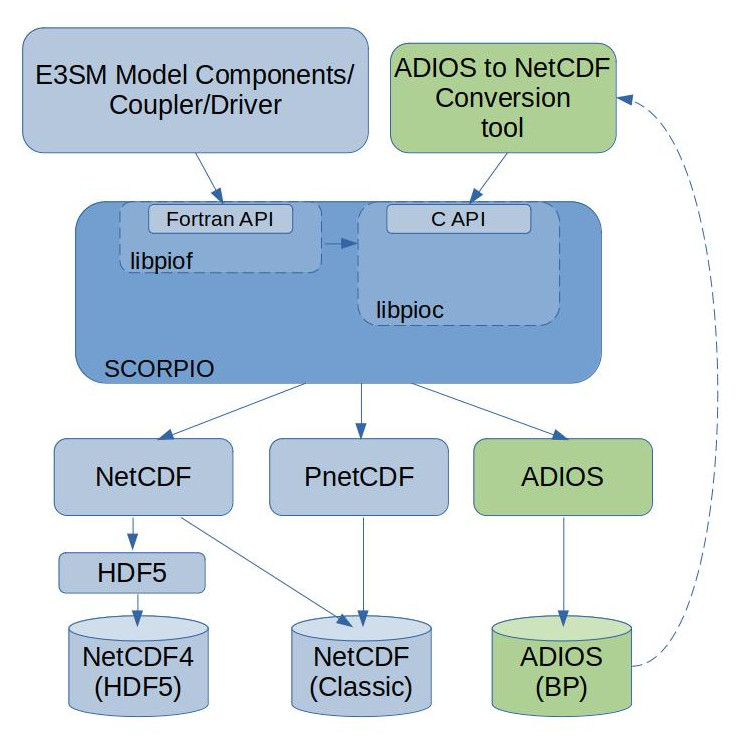

Figure 1. SCORPIO high-level architecture showing interactions with external applications and libraries.

The SCORPIO (Software for Caching Output and Reads for Parallel I/O) library is used by all model components in DOE’s Energy Exascale Earth System Model (E3SM) to read input data and write model output (Fig. 1). As complex Earth system models such as E3SM move to higher resolutions, more grid cells are needed to cover the Earth, and writing out the data from every grid cell as the model runs makes input/output (I/O) performance a key concern. The challenge is significant enough that the E3SM project has developed dedicated tools, such as SCORPIO, to improve I/O performance.

The SCORPIO library provides a portable application programming interface (API) for describing, reading, and storing array-oriented data that is distributed in memory across multiple processes. In E3SM, data is typically not decomposed across the compute processes in an “I/O friendly” way. To improve I/O performance, SCORPIO can rearrange the distributed data among processes before handing it to low-level I/O libraries such as PnetCDF, NetCDF, and ADIOS, which write it to the file system.
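To make the idea of rearrangement concrete, the sketch below simulates it in plain Python, with no MPI and no SCORPIO calls. It assumes a cyclic decomposition in which each compute task holds scattered global indices, and it routes every element to one of a smaller number of I/O tasks that each own a contiguous block of the global array, so that each I/O task could issue a single contiguous write. The names `io_block_bounds` and `rearrange` are hypothetical and are not part of the SCORPIO API.

```python
import bisect
from typing import Dict, List, Tuple


def io_block_bounds(global_size: int, num_io_tasks: int) -> List[Tuple[int, int]]:
    """Split [0, global_size) into num_io_tasks contiguous half-open blocks."""
    base, extra = divmod(global_size, num_io_tasks)
    bounds, start = [], 0
    for task in range(num_io_tasks):
        length = base + (1 if task < extra else 0)
        bounds.append((start, start + length))
        start += length
    return bounds


def rearrange(compute_data: Dict[int, Dict[int, float]],
              global_size: int, num_io_tasks: int) -> List[List[float]]:
    """Route each (global index, value) pair from its compute task to the
    I/O task that owns the contiguous block containing that index.

    compute_data maps a compute rank to {global_index: value} for the
    elements it holds; the result holds, per I/O task, its block's values
    in global-index order.
    """
    bounds = io_block_bounds(global_size, num_io_tasks)
    starts = [lo for lo, _ in bounds]
    buffers = [[0.0] * (hi - lo) for lo, hi in bounds]
    for owned in compute_data.values():
        for gidx, value in owned.items():
            # The last block whose start is <= gidx owns this index.
            task = bisect.bisect_right(starts, gidx) - 1
            buffers[task][gidx - starts[task]] = value
    return buffers


# Cyclic ("I/O unfriendly") layout: compute rank r holds global indices r and r + 4.
compute = {r: {g: float(10 * g) for g in (r, r + 4)} for r in range(4)}
print(rearrange(compute, global_size=8, num_io_tasks=2))
# [[0.0, 10.0, 20.0, 30.0], [40.0, 50.0, 60.0, 70.0]]
```

Here the I/O tasks are just a list of in-memory buffers; in a real parallel run the routing step would be a data exchange between processes, which is where a library such as SCORPIO does its work.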

SCORPIO is derived from the Parallel I/O library (PIO) and adds advanced caching and data rearrangement algorithms while maintaining the same application programming interface as PIO. The older version of the library, implemented in Fortran, is referred to as “SCORPIO classic”; the latest version, implemented in C, is referred to simply as “SCORPIO”.
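SCORPIO's caching algorithms are not described in detail here. As a rough illustration of the general idea behind write caching, the hypothetical sketch below buffers small writes in memory and hands them to a backend in batches once a byte threshold is reached, so the file system sees fewer, larger requests. The `WriteCache` class, its `limit_bytes` threshold, and the `flush_fn` backend callback are assumptions for illustration only, not SCORPIO's implementation.

```python
from typing import Callable, List, Tuple


class WriteCache:
    """Hypothetical write cache: aggregate small writes, flush them in batches."""

    def __init__(self, flush_fn: Callable[[List[Tuple[str, bytes]]], None],
                 limit_bytes: int) -> None:
        self.flush_fn = flush_fn        # backend call that performs one batched write
        self.limit_bytes = limit_bytes  # flush once at least this many bytes are cached
        self.pending: List[Tuple[str, bytes]] = []
        self.cached_bytes = 0

    def write(self, var: str, data: bytes) -> None:
        """Cache one write; flush automatically when the threshold is reached."""
        self.pending.append((var, data))
        self.cached_bytes += len(data)
        if self.cached_bytes >= self.limit_bytes:
            self.flush()

    def flush(self) -> None:
        """Hand all cached writes to the backend as a single batch."""
        if self.pending:
            self.flush_fn(self.pending)
            self.pending = []
            self.cached_bytes = 0


batches: List[List[Tuple[str, bytes]]] = []
cache = WriteCache(batches.append, limit_bytes=16)
for step in range(5):
    cache.write(f"T_{step}", b"x" * 6)  # five 6-byte records
cache.flush()                            # e.g. when the file is closed
print([len(batch) for batch in batches])  # [3, 2]
```

Batching trades memory for fewer I/O calls; a production library would also need to bound memory use and flush on file close, which this sketch leaves to an explicit `flush()` call.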

The SCORPIO library can write data in the NetCDF file format or the ADIOS BP file format, and it provides a tool to convert ADIOS BP files to NetCDF. The library also includes an exhaustive test suite that runs nightly, with results reported on CDash.

Performance

Figure 2 shows the performance of SCORPIO measured on Cori and Summit using two E3SM simulation configurations: one with a high-resolution atmosphere (F case) and one with a high-resolution ocean (G case).

The high-resolution atmosphere F case runs active atmosphere and land models at 1/4 degree (~28 km) resolution, the sea ice model on a regionally refined grid with resolutions ranging from 18 km to 6 km, and the runoff model at 1/8 degree resolution. The atmosphere is the only component that writes output data. In this configuration, all output used to re-run or restart a simulation (restart output) is disabled, and the component writes only history data, which has historically shown poor I/O performance. A one-day run of this configuration generates two output files with a total size of approximately 20 GB.

The high-resolution ocean G case runs the ocean and sea ice models on a regionally refined grid with resolutions ranging from 18 km to 6 km. To measure the I/O throughput of the ocean model, all output from the sea ice component was disabled in this configuration. A one-day run of this configuration generates 80 GB of output from the ocean model.

Using ADIOS as the low-level I/O library, SCORPIO achieves a write throughput of 4.9 GB/s on Cori and 12.5 GB/s on Summit for the high-resolution F case, and 18.5 GB/s on Cori and 130 GB/s on Summit for the G case.
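One way to read these throughput numbers is to convert them into approximate write times for the one-day output volumes of the two configurations (about 20 GB for the F case and 80 GB for the G case). The sketch below does that division; it assumes the reported throughput is sustained over the entire output, so the results are back-of-the-envelope estimates, not measured timings. The helper `write_time_seconds` is hypothetical.

```python
def write_time_seconds(size_gb: float, throughput_gb_per_s: float) -> float:
    """Idealized time to write size_gb at a sustained throughput in GB/s."""
    return size_gb / throughput_gb_per_s


# (case, machine) -> (one-day output size in GB, reported write throughput in GB/s)
measurements = {
    ("F case", "Cori"): (20.0, 4.9),
    ("F case", "Summit"): (20.0, 12.5),
    ("G case", "Cori"): (80.0, 18.5),
    ("G case", "Summit"): (80.0, 130.0),
}

for (case, machine), (size_gb, rate) in measurements.items():
    print(f"{case} on {machine}: ~{write_time_seconds(size_gb, rate):.1f} s")
```

This prints roughly 4.1 s and 1.6 s for the F case, and 4.3 s and 0.6 s for the G case, on Cori and Summit respectively.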

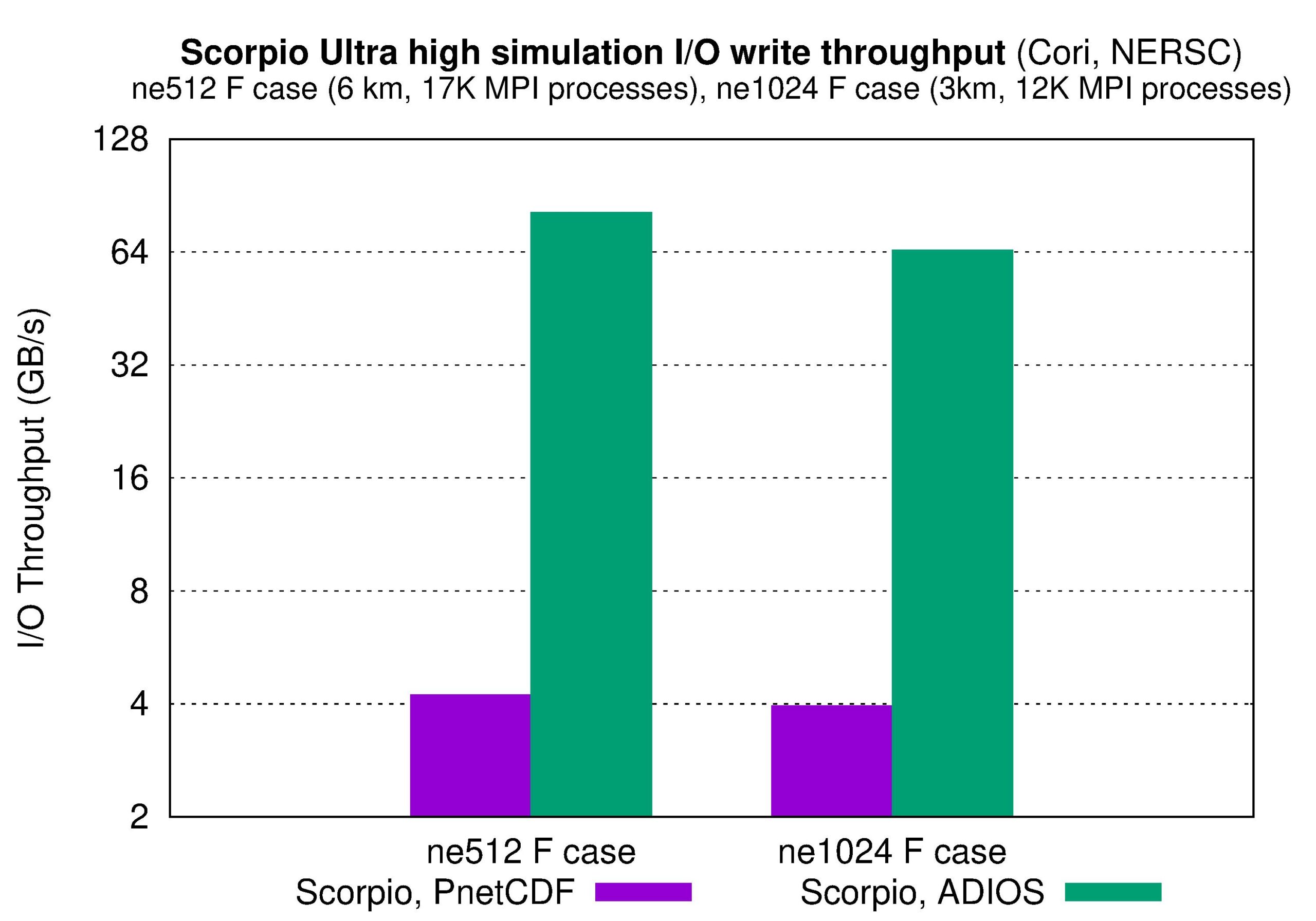

Figure 3. SCORPIO write throughput on Cori for ultra-high-resolution simulations: ne512 F case and ne1024 F case, which have global resolutions of 6 km and 3 km, respectively.

The improved performance of SCORPIO, compared with SCORPIO classic, was critical for running E3SM ultra-high-resolution simulations on Cori. These simulations write multiple terabytes of restart data and could not complete with SCORPIO classic within the maximum run time allowed by Cori's queue limits. Ultra-high-resolution results are important to climate scientists because many critical climate processes occur at kilometer (km) scales. With SCORPIO, E3SM developers can write and analyze the output of these ultra-high-resolution simulations.

The performance of SCORPIO was measured using two E3SM ultra-high-resolution atmosphere simulations on Cori: one with 6 km global resolution (ne512 F case) and one with 3 km global resolution (ne1024 F case). The ne512 F case simulations write restart files of about 1.5 TB, while the ne1024 F case simulations write restart files of about 3 TB. As shown in Figure 3, SCORPIO with ADIOS achieves a write throughput on Cori of 82 GB/s for the ne512 F case and 65 GB/s for the ne1024 F case. The SCORPIO developers are currently measuring the performance of the ultra-high-resolution simulations on Summit.
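Dividing the restart file size $S$ by the reported write throughput $B$ gives a rough sense of how long each restart write takes. This assumes decimal units (1 TB = 1000 GB) and a throughput sustained over the whole write, so the values are estimates rather than measured timings:

```latex
t = \frac{S}{B}, \qquad
t_{\mathrm{ne512}} \approx \frac{1500\ \mathrm{GB}}{82\ \mathrm{GB/s}} \approx 18.3\ \mathrm{s}, \qquad
t_{\mathrm{ne1024}} \approx \frac{3000\ \mathrm{GB}}{65\ \mathrm{GB/s}} \approx 46.2\ \mathrm{s}
```

Because the ne1024 restart is twice as large and is written at a lower throughput, it takes roughly 2.5 times as long to write as the ne512 restart.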

Future Work

In the near future, E3SM model developers will be able to use ADIOS to write model output. Enhancements to the Common Infrastructure for Modeling the Earth (CIME) that will seamlessly convert ADIOS output files to NetCDF are currently in progress. SCORPIO developers are also working on support for reading data in the ADIOS format, which will reduce model initialization time, and are continuing to improve I/O performance with the NetCDF and PnetCDF libraries.

SCORPIO Link

- SCORPIO, Software for Caching Output and Reads for Parallel I/O, https://github.com/E3SM-Project/scorpio

Other Libraries, Data Formats, and Related Tools

- ADIOS, The Adaptable I/O System, https://csmd.ornl.gov/adios

- CIME, Common Infrastructure for Modeling the Earth, https://github.com/ESMCI/cime (http://doi.org/10.5065/WE0D-9K91)

- HDF5, Hierarchical Data Format, version 5, The HDF Group, 1997-2020, http://www.hdfgroup.org/HDF5

- NetCDF, Network Common Data Form, https://www.unidata.ucar.edu/software/netcdf (https://doi.org/10.5065/D6H70CW6)

- PnetCDF, Parallel NetCDF, http://cucis.ece.northwestern.edu/projects/PnetCDF

References

- Jianwei Li, Wei-keng Liao, Alok Choudhary, Robert Ross, Rajeev Thakur, William Gropp, Rob Latham, Andrew Siegel, Brad Gallagher, and Michael Zingale. Parallel netCDF: A Scientific High-Performance I/O Interface. In Proceedings of the Supercomputing Conference, November 2003.

- Q. Liu, J. Logan, Y. Tian, H. Abbasi, N. Podhorszki, J.-Y. Choi, S. Klasky, R. Tchoua, J. Lofstead, R. Oldfield, M. Parashar, N. Samatova, K. Schwan, A. Shoshani, M. Wolf, K. Wu, and W. Yu. Hello ADIOS: The Challenges and Lessons of Developing Leadership Class I/O Frameworks. Concurrency and Computation: Practice and Experience, 26: 1453–1473, 2014. https://doi.org/10.1002/cpe.3125

Contact

- Jayesh Krishna, Argonne National Laboratory