NCO’s New Lossy Compression Options Squeeze Data Better

Other software can also perform lossy compression, but NCO offers two big advantages for this purpose: 1) correct treatment of missing values, which are never altered during compression, and 2) special treatment of coordinates and grid variables, whose full precision is preserved.

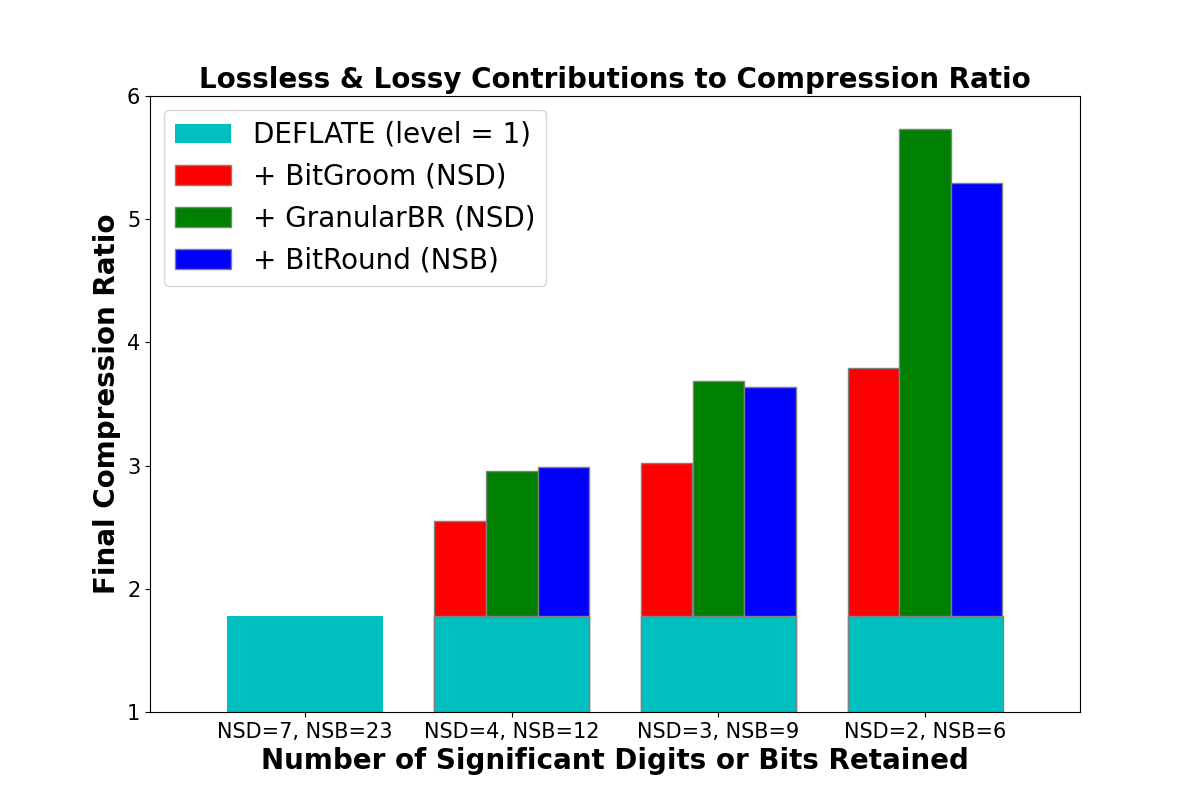

The standard metric for compression efficacy is the compression ratio (CR), defined as the dataset size before compression divided by its size after compression. Data compressed with CR=2 occupy 50% of their original size, data with CR=4 occupy 25%, and so on. NCO recently developed and implemented a codec named Granular BitRound, which can double or triple the CR of the underlying lossless compressor (Figure 1) and is available in E3SM-Unified 1.6.0. This article shows how to invoke quantization and lossless compression during post-processing with NCO, and summarizes where geoscience-relevant compression options are headed.
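The CR arithmetic above can be checked with a few lines of Python. The 445 MB raw size is taken from the Figure 1 caption. The CR values are the illustrative ones from the text, not measured results, and the helper names are hypothetical.

```python
def compression_ratio(size_before: float, size_after: float) -> float:
    """CR = dataset size before compression / size after compression."""
    if size_after <= 0:
        raise ValueError("compressed size must be positive")
    return size_before / size_after


def fraction_remaining(cr: float) -> float:
    """Fraction of the original size that the compressed data occupy (1/CR)."""
    return 1.0 / cr


raw_mb = 445.0  # raw EAM v2 monthly dataset size quoted in Figure 1
for cr in (2.0, 4.0):
    # e.g. CR=2 leaves 50% of the original bytes, CR=4 leaves 25%
    print(f"CR={cr:g}: {fraction_remaining(cr):.0%} of original, {raw_mb / cr:.2f} MB")
```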

Invoking Lossless Compression

By default, E3SM component models produce data in a netCDF “classic” format that does not support internal compression. Compression is therefore most often introduced during post-processing, together with the necessary conversion to a netCDF4-based format that does support internal compression. Many researchers are already comfortable invoking DEFLATE, the venerable lossless compression algorithm implemented by the zlib library, which the Coupled Model Intercomparison Project Phase 6 (CMIP6) mandates. The easy way to losslessly compress raw E3SM output (in any netCDF format) with DEFLATE is to invoke NCO with two settings. First, set the output file format to netCDF4-classic (-7 or, in longhand, --fl_fmt=netcdf4_classic). Second, set a “deflation level” between 1 and 9. Higher levels produce marginally better compression ratios at a considerable cost in time, so --dfl_lvl=1 (or just -L 1 for short) is adequate for most purposes:

ncks -7 -L 1 in.nc out.nc
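The level-versus-time trade-off can be explored outside NCO with Python's standard zlib module, since DEFLATE in netCDF4 files is also provided by zlib. This is only a sketch under stated assumptions. The synthetic sine-wave field stands in for real model output. The sketch does not reproduce NCO's or HDF5's chunking or shuffle settings, so the CRs and timings it prints will differ from those on real E3SM files.

```python
import array
import math
import time
import zlib

# Synthetic single-precision "field" (an assumption standing in for model output).
values = array.array("f", (math.sin(i / 50.0) + 0.01 * math.sin(i / 3.0)
                           for i in range(200_000)))
raw = values.tobytes()

for level in (1, 6, 9):
    t0 = time.perf_counter()
    packed = zlib.compress(raw, level)
    elapsed_ms = (time.perf_counter() - t0) * 1e3
    # DEFLATE is lossless: decompression reproduces the exact input bytes.
    assert zlib.decompress(packed) == raw
    print(f"level={level}: CR={len(raw) / len(packed):.3f}, {elapsed_ms:.1f} ms")
```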

Figure 1. Compression ratio of the E3SM Atmosphere Model (EAM) v2 default monthly dataset (raw size 445 MB). The dataset is compressed either with the default netCDF lossless compression algorithm (DEFLATE, compression level 1) alone (leftmost) or after pre-filtering with one of three lossy codecs (BitGroom, Granular BitRound, or BitRound). Quantization increases (and precision decreases) to the right. The commands used are shown in the main text.

Invoking Lossy Compression

Quantization algorithms work as pre-filters, so quantization must be followed by lossless compression to improve the CR. To invoke a quantization pre-filter, use the option --ppc default=NSD to specify the Number of Significant Digits (NSD) that the lossy algorithm should preserve. This tells NCO to apply Precision Preserving Compression (PPC) of NSD decimal digits to all floating-point variables. E3SM datasets contain mostly single-precision (32-bit) IEEE floating-point values, which store 6-7 significant digits. Consequently, NSD=5 slightly increases the CR, while NSD=1 considerably increases the CR (and reduces precision). Double-precision (64-bit) values start with 15-16 significant digits, so quantizing them to the same NSD discards more bits and produces higher CRs. For example, this command uses the default quantization algorithm to preserve three significant digits and then compresses with DEFLATE:

ncks -7 -L 1 --ppc default=3 in.nc out.nc
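The sketch below shows why quantization must be followed by lossless compression. It zeroes the trailing mantissa bits of float32 values using simple "bit shaving", which is not NCO's default Granular BitRound codec, and then applies zlib at level 1. The filtered bytes are exactly as large as the raw bytes. Only the subsequent DEFLATE step turns the runs of zero bits into a higher CR. The function name and synthetic data are assumptions for illustration.

```python
import math
import struct
import zlib


def shave_float32(values, keep_bits):
    """Return float32 bytes with all but the leading `keep_bits` explicit
    mantissa bits set to zero (simple bit shaving, NOT NCO's default codec)."""
    if not 0 <= keep_bits <= 23:
        raise ValueError("float32 has 23 explicit mantissa bits")
    n = len(values)
    ints = struct.unpack(f"<{n}I", struct.pack(f"<{n}f", *values))
    # Keep sign, exponent, and the leading `keep_bits` mantissa bits.
    mask = (0xFFFFFFFF << (23 - keep_bits)) & 0xFFFFFFFF
    return struct.pack(f"<{n}I", *(i & mask for i in ints))


# Synthetic stand-in for a model field (an assumption, not E3SM data).
values = [math.sin(i / 50.0) + 0.01 * math.sin(i / 3.0) for i in range(50_000)]
raw = struct.pack(f"<{len(values)}f", *values)

for keep_bits in (23, 16, 10):
    filtered = shave_float32(values, keep_bits)
    assert len(filtered) == len(raw)  # the pre-filter alone saves no space
    cr = len(raw) / len(zlib.compress(filtered, 1))
    print(f"keep_bits={keep_bits:2d}: CR after DEFLATE level 1 = {cr:.2f}")
```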

The three most useful quantization codecs are Granular BitRound (the default), BitGroom, and BitRound. All three adjust the least significant bits of floating-point values, so a more generic name for this type of codec is “Bit Adjustment Algorithm” (BAA). Use the --baa option to choose a non-default codec:

ncks -7 -L 1 --baa=4 --ppc default=3 in.nc out.nc # Granular BitRound (default)

ncks -7 -L 1 --baa=0 --ppc default=3 in.nc out.nc # BitGroom

ncks -7 -L 1 --baa=8 --ppc default=9 in.nc out.nc # BitRound
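As an illustration of a bit adjustment algorithm, the Python sketch below mimics the alternating "shave/set" idea behind BitGroom (Zender, 2016). Even-indexed values have their trailing bits zeroed and odd-indexed values have them set to one, so the rounding errors alternate in sign and largely cancel in means. This is not a transcription of NCO's C implementation. The rule in `bits_for_nsd` (one guard bit beyond ceil(NSD × log2 10)) and the choice to skip zeros and non-finite values are assumptions of this sketch.

```python
import math
import struct

FLOAT32_MANTISSA_BITS = 23


def bits_for_nsd(nsd):
    """Explicit mantissa bits kept for a requested NSD (assumed rule:
    ceil(nsd * log2(10)) plus one guard bit, capped at 23)."""
    return min(FLOAT32_MANTISSA_BITS, math.ceil(nsd * math.log2(10)) + 1)


def bitgroom_float32(values, nsd):
    """BitGroom-style sketch: shave (zero) the trailing bits of even-indexed
    values and set (one) them for odd-indexed values."""
    drop = FLOAT32_MANTISSA_BITS - bits_for_nsd(nsd)
    low_mask = (1 << drop) - 1
    keep_mask = 0xFFFFFFFF ^ low_mask
    out = []
    for idx, v in enumerate(values):
        if v == 0.0 or not math.isfinite(v):  # leave zeros, NaN, inf untouched
            out.append(v)
            continue
        (bits,) = struct.unpack("<I", struct.pack("<f", v))
        bits = bits & keep_mask if idx % 2 == 0 else bits | low_mask
        (q,) = struct.unpack("<f", struct.pack("<I", bits))
        out.append(q)
    return out


# Usage: compare means before and after grooming to three significant digits.
vals = [1.0 + i / 997.0 for i in range(1000)]
groomed = bitgroom_float32(vals, 3)
print(sum(groomed) / len(groomed) - sum(vals) / len(vals))
```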

The Granular BitRound codec yields the best CR for a given NSD and is therefore the default quantization algorithm in NCO. For the same NSD, it improves the CR of its predecessor, BitGroom (Zender, 2016), by about 20% (Figure 1). However, because of the trade-off between CR and precision (Silver and Zender, 2017), BitGroom produces smaller mean biases. BitRound expects the user to supply the Number of Significant Bits (NSB), not the NSD, so the --ppc value in the BitRound example above is an NSB. BitRound allows finer-grained levels of quantization than NSD-based algorithms, since there are roughly 3.3 bits per decimal digit (log2 10 ≈ 3.32).
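The NSB idea can be sketched in Python. `bitround_float32` rounds a float32 to `nsb` explicit mantissa bits with round-half-to-even, using a common integer trick for BitRound-style rounding. `nsd_to_nsb` converts digits to bits with log2(10) ≈ 3.32 bits per decimal digit. Both are hypothetical helpers that illustrate the technique, not NCO's API. The exact NSD-to-NSB mapping NCO uses internally is not assumed here.

```python
import math
import struct


def nsd_to_nsb(nsd):
    """Approximate significant bits needed for `nsd` decimal digits."""
    return math.ceil(nsd * math.log2(10))


def bitround_float32(value, nsb):
    """Round a float32 to `nsb` explicit mantissa bits, ties to even."""
    if not 0 <= nsb <= 23:
        raise ValueError("nsb must be in [0, 23] for float32")
    if nsb == 23 or not math.isfinite(value):
        return value
    (bits,) = struct.unpack("<I", struct.pack("<f", value))
    shift = 23 - nsb
    half = 1 << (shift - 1)
    bits += (half - 1) + ((bits >> shift) & 1)  # round half to even
    bits &= 0xFFFFFFFF ^ ((1 << shift) - 1)      # clear the discarded bits
    (q,) = struct.unpack("<f", struct.pack("<I", bits))
    return q


# Usage: the same value at bit counts roughly equivalent to 1, 2, and 3 digits.
for nsd in (1, 2, 3):
    nsb = nsd_to_nsb(nsd)
    print(nsd, nsb, bitround_float32(math.pi, nsb))
```

Because NSB is an integer count of bits, each step changes the retained precision by a factor of two rather than ten. This is the finer granularity the paragraph describes.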

Advantages of Compression with NCO

Invoking quantization through NCO codecs has multiple advantages relative to other methods:

1) Correct treatment of missing values. NCO never quantizes a _FillValue, whereas other codecs might.

2) Full precision for coordinate and grid variables. NCO never quantizes coordinates (e.g., latitude, time) or other grid variables (e.g., area, lat_bnds), so the accuracy of further analysis and regridding is not compromised. The results in Figure 1 include some of these full-precision variables, and they reduce the final CR only negligibly.

3) Consistency with higher-level workflows. ncremap and ncclimo support quantization with identical options. For ease of use, however, their --ppc option expects only the NSD for the default quantization codec (Granular BitRound), e.g.,

ncclimo -7 -L 1 --ppc=3 -c v2.LR -s 1 -e 100 -i raw -o clm

ncremap -7 -L 1 --ppc=3 -m map.nc in.nc out.nc
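The two safeguards described above can be illustrated with a hypothetical Python helper. Entries equal to _FillValue pass through unchanged, and variables flagged as coordinates are returned at full precision. NCO does this internally in C. Several details here are assumptions: the function names, the decimal-rounding stand-in quantizer (used in place of a bit adjustment algorithm), and the use of netCDF's default float fill value.

```python
import math

FILL = 9.969209968386869e36  # netCDF default fill value for floats (assumed here)


def quantize_decimal(x, nsd):
    """Stand-in quantizer: round to `nsd` significant decimal digits."""
    if x == 0.0 or not math.isfinite(x):
        return x
    return round(x, nsd - 1 - math.floor(math.log10(abs(x))))


def quantize_variable(data, nsd, fill_value=None, is_coordinate=False):
    """Quantize a list of floats, never touching coordinates or _FillValue entries."""
    if is_coordinate:
        return list(data)  # coordinates and grid variables keep full precision
    return [x if (fill_value is not None and x == fill_value)
            else quantize_decimal(x, nsd)
            for x in data]


temps = [287.123456, FILL, 301.987654]
lat = [-89.4628215685774, 0.0, 89.4628215685774]
print(quantize_variable(temps, 3, fill_value=FILL))
print(quantize_variable(lat, 3, is_coordinate=True))
# Without the fill-value check, the fill value itself would be rounded and
# would no longer match _FillValue, turning missing data into "valid" data.
print(quantize_decimal(FILL, 3) == FILL)
```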

Sound Compression Defaults for E3SM

Determining appropriate NSD values involves multiple considerations. The maximum relative quantization error incurred by a given NSD is 0.5 × 10^(-NSD). We recommend starting with a default of NSD=3, so quantized values are within 0.05% of raw values. This is more than adequate for most post-processing needs. Quantization employs unbiased IEEE rounding, so statistics (e.g., global means) remain virtually unchanged. To optimize the compression vs. precision trade-off, apply quantization on a per-variable basis by chaining multiple ppc arguments together with hash marks (#). For example, this command sets a default of NSD=3 for all variables, with exceptions for variables where more or less precision may be justified:

ncks -7 -L 1 --ppc 'default=3#PS=7#Q,T=4#CLD.?,CLOUD.?=2' in.nc out.nc # Quotes stop the shell from treating ? and # specially

This example demonstrates three variable-specific quantization considerations:

1) Retain higher precision for surface pressure (PS) if it will subsequently be used to reconstruct the vertical grid and mass fields.

2) Retain somewhat higher precision for tracers that may reasonably vary by many orders of magnitude, like water vapor (Q). This helps to avoid round-off error in derived fields such as budgets.

3) Retain fewer digits than the default for fields that climate models are notoriously poor at predicting, like cloud or aerosol properties and processes. Here these are encompassed by all variables that match the regular expressions CLD.? and CLOUD.?.
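The hypothetical Python sketch below mimics how such a --ppc string could be split into per-variable NSD values. For each variable, it reports the worst-case relative error 0.5 × 10^(-NSD). It is not NCO's parser, and three of its rules are assumptions: names containing regex metacharacters are treated as unanchored patterns, other names match exactly, and later groups override earlier ones.

```python
import re

REGEX_CHARS = set(".^$*+?()[]{}|\\")


def parse_ppc(spec):
    """Split a --ppc string like 'default=3#PS=7#Q,T=4' into (default, rules)."""
    default, rules = None, []
    for group in spec.split("#"):
        names, nsd = group.rsplit("=", 1)
        for name in names.split(","):
            if name == "default":
                default = int(nsd)
            else:
                rules.append((name, int(nsd)))
    return default, rules


def nsd_for(var, default, rules):
    """NSD for `var`; assumed: later matching rules override earlier ones."""
    nsd = default
    for name, value in rules:
        is_regex = any(c in REGEX_CHARS for c in name)
        if name == var or (is_regex and re.search(name, var)):
            nsd = value
    return nsd


def max_rel_error(nsd):
    """Worst-case relative quantization error, 0.5 x 10^(-NSD)."""
    return 0.5 * 10.0 ** (-nsd)


default, rules = parse_ppc("default=3#PS=7#Q,T=4#CLD.?,CLOUD.?=2")
for var in ("PS", "Q", "T", "U", "CLDLOW", "CLOUD"):
    nsd = nsd_for(var, default, rules)
    print(f"{var:7s} NSD={nsd}  max relative error={max_rel_error(nsd):.0e}")
```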

Near-Future Enhancements and Compression Speed

This is a year of rapid innovation in geoscientific compression technology. For the last fifteen years, the netCDF library has seamlessly supported only one codec (DEFLATE). At long last, the next netCDF version (4.9.0) will contain multiple modern codecs, including Granular BitRound, BitRound, and Zstandard (Hartnett et al., 2021). Moreover, this release will include the C and Fortran APIs that models like E3SM need to compress data with these algorithms at run-time, instead of relying on post-processing tools like NCO. Modern codecs typically write up to 4x faster and read up to 10x faster than DEFLATE (Hartnett and Zender, 2021). Analyzing data compressed with the newer algorithms can therefore be nearly as speedy as analyzing uncompressed data. Stay tuned for an NCO update that leverages these faster codecs!

Links to the E3SM v2 Code

- Main Page on e3sm.org: NCO

- Users Guide: HTML, PDF

- Code Repository: GitHub – nco/nco: netCDF Operators

- Code Citation: DOI: 10.5281/zenodo.595745

- News post, 2020/07: NCO Supports New Regridding and timeseries features

- News post, 2019/08: New NCO Release Supports Vertical Regridding

- News post, 2019/02: NCO Release 4.7.8

References

- Hartnett, E. J., and C. S. Zender (2021), Additional netCDF Compression Options with the Community Codec Repository (CCR), https://doi.org/10.13140/RG.2.2.13223.37285.

- Hartnett, E. J., C. S. Zender, W. Fisher, D. Heimbigner, H. Lei, B. Curtis, and K. Gerheiser (2021), Quantization and Next-Generation Zlib Compression for Fully Backward-Compatible, Faster, and More Effective Data Compression in NetCDF Files, https://www.researchgate.net/publication/356815021_Quantization_and_Next-Generation_Zlib_Compression_for_Fully_Backward-Compatible_Faster_and_More_Effective_Data_Compression_in_NetCDF_Files

- Silver, J. D. and C. S. Zender (2017), The compression-error trade-off for large gridded data sets, Geosci. Model Dev., 10, 413-423, https://doi.org/10.5194/gmd-10-413-2017.

- Zender, C. S. (2016), Bit Grooming: Statistically accurate precision-preserving quantization with compression, evaluated in the netCDF Operators (NCO, v4.4.8+), Geosci. Model Dev., 9, 3199-3211, https://doi.org/10.5194/gmd-9-3199-2016.

Funding

- This research was supported as part of the Energy Exascale Earth System Model (E3SM) project funded by the U.S. Department of Energy, Office of Science, Office of Biological and Environmental Research, through the E3SM’s sub-project “High-Performance Analysis and Regridding Support for E3SM” (LLNL-B641620).

- This material is based upon work supported by the National Science Foundation under Grant No. OAC-2004993.

Contacts

- Charlie Zender, University of California, Irvine